The Dangers of Military-Grade Artificial Intelligence

SOURCE: SOFREP.COM

DEC 13, 2021

Military-grade artificial intelligence will soon be the norm in warfare. And even if the international community agrees to ban it, it may not be enough. The use of Artificial Intelligence (AI) in warfare could have devastating consequences for humanity. AI is now being integrated into military operations to lower human casualties, but what are the risks? What are the implications? How can we be sure that the military-grade AI remains under control? Do we really want to let machines determine who lives and who dies? Maybe…

There are many questions about how AI will affect us. Still, one thing is clear: weaponized military AI is dangerous, especially in the hands of the current Joint Chiefs of Staff leading the Department of Defense, who have trouble downloading the latest smartphone update. Think about that for a moment.

Weaponized military AI is a real threat. It could be used for unethical purposes like causing harm, torture, or violating human rights. The international community is currently debating whether or not the use of military AI should be banned, but that ship has long sailed.

Another big problem, related to the smartphone dig above, is that Department of Defense leadership has been accused of not understanding military-grade artificial intelligence. This is because they rely entirely too much on experts instead of doing the research themselves.

This lack of knowledge leaves them poorly equipped to guide the broader organization, which has to make important decisions about using AI in military systems.

What is Military-Grade AI?

With artificial intelligence, there are no limits to what can be achieved. AI provides the capability to make decisions and take action without human intervention. As a result, it provides many perceived military advantages.

Artificial intelligence has massively evolved in recent years to the point that it can now be weaponized and used for warfare.

This new technology is called “weapons-grade” or “military-grade” AI because it is integrated into weapons systems and military operations, giving them capabilities beyond anything previously available.

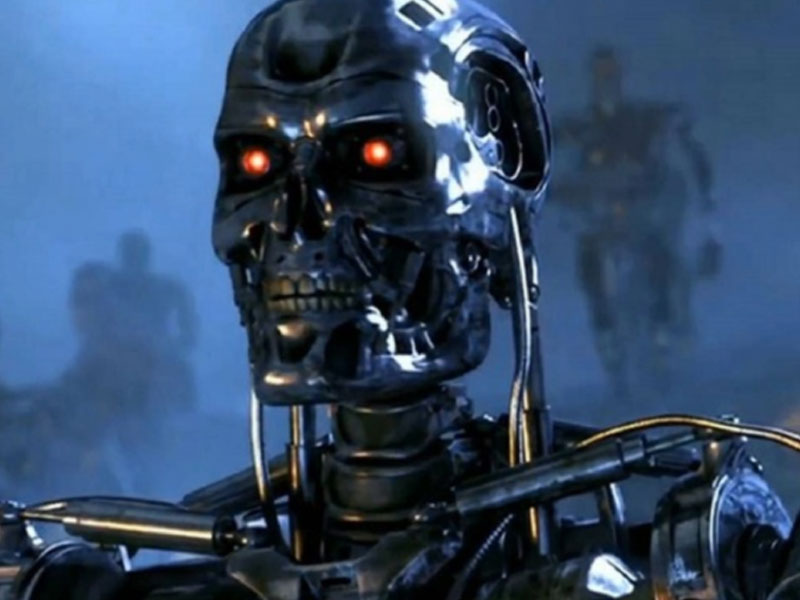

Military-grade AI is often used in autonomous weapons systems, such as drones and robots, that operate independently without human oversight. These weapons have greater capabilities than traditional weapons systems, and their sophisticated programming means they don’t require human control at all times. This sounds like the infamous Skynet, the fictional artificial neural network-based conscious group mind and artificial general superintelligence system from the movie Terminator.

But weapons-grade AI is already here and could soon be the norm in warfare. International agreements could not stop it from happening, so what would happen if these weapons were unleashed on humanity? Surely China, North Korea, Iran, and Russia are developing their own versions, each of which carries risks to global stability.

The Risks of Weaponized AI

Weaponized AI creates a new form of warfare in which one side can use technology to gain a competitive edge. For example, China has been investing heavily in military-grade AI projects and is expected to be the first country to develop autonomous weapons. Soon, these robots could replace human soldiers.

What happens if China is, in fact, first? What does this mean for the rest of the world?

The implications of weaponized AI are enormous, and few countries will be able to avoid them:

- The international community may agree to ban this type of warfare. However, a ban won’t be enough, because some countries may still use AI weaponry.

- This creates an arms race in which countries compete to stay ahead in AI. It is happening now, but quietly, which is why the editors of SOFREP assigned me this important topic.

- If one country develops weapons with a clear advantage over current weapons, other countries could be forced to follow suit or risk losing their ability to compete militarily.

- The risks of weaponized AI could escalate if nations come to see their own weapons as ineffective against their competitors’ advanced new weaponry.

Can We Control It?

In the future, we will live in a world dominated by AI. It will be everywhere, from AI-based energy systems that give us electricity to AI-powered networks that carry our communications and trade.

There’s no guarantee that these systems are safe or controllable. This might be why Elon Musk is pushing for open-source AI and some type of global agreement, so that the playing field is level for all of humanity. We think this is an intelligent course of action.

Robots are already being built to replace human labor, but they could also take our place as the ones in charge. This could lead to what some have called the “technological singularity,” and we’re back in the movie Terminator again.

We’re approaching a time when AI might become too advanced to control, a time when machine intelligence can surpass human intelligence.

This is not just a theory; it has already happened with self-driving cars. With some autonomous driving technology, humans can no longer even override the car’s controls.

We’re going to have to find ways to prevent weaponized military AI from taking over and becoming uncontrollable. The time for this important conversation is now, before the robot cat is out of the bag.
