Hybrid AI-powered computer vision combines physics and big data

SOURCE: HTTPS://WWW.SCIENCEDAILY.COM/

AUG 14, 2023

An illustrated guide to dynamic neural networks for beginners

SOURCE: ANALYTICSINDIAMAG.COM

OCT 10, 2021

Dynamic neural networks are a rapidly emerging research topic in deep learning. Traditional static neural networks are trained once and then run with fixed parameters and a fixed computation, whatever the input. In practice, however, inputs and operating environments vary widely, so we need models that can adjust themselves to the input and the environment. Dynamic neural networks are designed to adapt in exactly this way. In this article, we will discuss dynamic neural networks in detail along with their popular categories. The major points to be covered in this article are listed below.

Let’s begin with the context in which dynamic neural networks arose.

Deep neural networks are now used for a wide range of problems in computer vision and natural language processing, and models such as ResNet, VGG, and GoogLeNet perform very well. Most of these models, however, work in a static manner: their computational graphs and parameters are fixed once training is done, which limits their interpretability, efficiency, and representation power. Dynamic neural networks can overcome these limitations of static neural networks.

Dynamic neural networks can be seen as an extension of static neural networks: by adding decision mechanisms, the network adapts its computation to each input and produces better results. These decision mechanisms let the network choose which computation to perform on an input, so that it reaches the required output more accurately and with more representation power. Such networks do not follow one fixed path. They learn from the input and the environment and can then change their computation path, producing good output without spending extra computation. Because this adaptation happens at run time, in response to the situation at hand, we add the word ‘dynamic’ before ‘neural network’. The section below presents the advantages of dynamic neural networks, which will make the idea clearer.

The following are the advantages of dynamic neural networks:-

Dynamic neural networks fall into three categories: sample-wise, spatial-wise, and temporal-wise. Let us discuss each of these categories in turn.

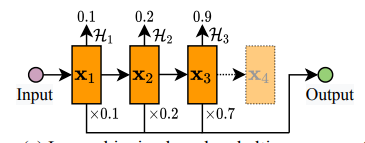

Sample-wise dynamic networks focus on allocating computation according to each individual sample. If a sample is easy for the network, it can be handled accurately with less computation; if it is difficult, the network can spend more computation to maintain accuracy. One way to do this is to adapt the network's parameters to each input while keeping the computational graph fixed, so that redundant computation does not inflate the cost. The main goal is to increase representation power at minimal cost.
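The idea of adapting parameters to each sample while the graph stays fixed can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the method of any particular paper: the names `dynamic_linear`, `expert_weights`, and `router` are hypothetical, and the "network" is a single linear layer whose weight matrix is a softmax-weighted blend of candidate matrices.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dynamic_linear(x, expert_weights, router):
    """Linear layer whose weight matrix is assembled per input.

    x              : input vector (list of floats, length d_in)
    expert_weights : K candidate matrices, each d_out x d_in (hypothetical)
    router         : K x d_in matrix giving one score per candidate (hypothetical)
    """
    # 1. Score every candidate matrix from the input itself.
    scores = [sum(r * v for r, v in zip(row, x)) for row in router]
    alphas = softmax(scores)
    # 2. Blend the candidates into one sample-specific matrix.
    d_out, d_in = len(expert_weights[0]), len(x)
    blended = [[sum(a * w[i][j] for a, w in zip(alphas, expert_weights))
                for j in range(d_in)] for i in range(d_out)]
    # 3. The graph is unchanged: still a single matrix-vector product.
    y = [sum(blended[i][j] * x[j] for j in range(d_in)) for i in range(d_out)]
    return y, alphas

# Toy setup: candidate 0 is the identity, candidate 1 doubles the input.
experts = [[[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, 2.0]]]
router = [[10.0, 0.0], [-10.0, 0.0]]
y_pos, a_pos = dynamic_linear([1.0, 2.0], experts, router)   # favours candidate 0
y_neg, a_neg = dynamic_linear([-1.0, 2.0], experts, router)  # favours candidate 1
```

Both calls run exactly the same operations; only the blended weights differ, which is what distinguishes dynamic parameters from a dynamic architecture.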

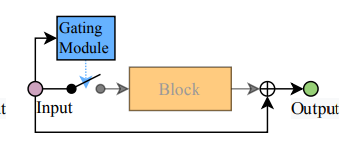

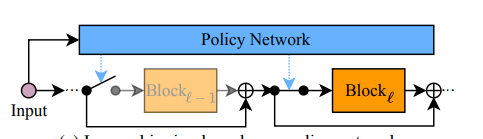

Acceleration techniques for static models keep the computation constant: the network performs the same computation on every input. In a dynamic neural network, a dynamic architecture allows conditional computation, which can be achieved by adjusting the width and depth of the network or by dynamic routing within a supernetwork. A network with a dynamic architecture saves computation on easy inputs and reserves its full representation power for hard ones. For an easy input, an accurate output can be produced from a shallow part of the network, so skipping the remaining layers saves their computation without hurting accuracy.
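One common way to adjust depth per input is early exiting: an intermediate classifier is attached after each block, and inference stops as soon as one of them is confident enough. The sketch below assumes this scheme; `early_exit_predict`, `toy_head`, and the 0.9 threshold are illustrative choices, and the doubling "blocks" stand in for real layers.

```python
import math

def early_exit_predict(x, blocks, exit_heads, threshold=0.9):
    """Run blocks in order; stop at the first confident exit head.

    blocks     : list of callables, feature -> feature
    exit_heads : one callable per block, feature -> list of class probabilities
    Returns (predicted_class, number_of_blocks_executed).
    """
    if not blocks or len(blocks) != len(exit_heads):
        raise ValueError("need one exit head per block, and at least one block")
    feature = x
    for depth, (block, head) in enumerate(zip(blocks, exit_heads), start=1):
        feature = block(feature)
        probs = head(feature)
        confidence = max(probs)
        # Easy inputs clear the threshold early; the final block always answers.
        if confidence >= threshold or depth == len(blocks):
            return probs.index(confidence), depth

# Toy two-class model: each block doubles a scalar feature, and every
# exit head turns the feature into [P(class 0), P(class 1)] with a sigmoid.
def toy_head(feature):
    p1 = 1.0 / (1.0 + math.exp(-feature))
    return [1.0 - p1, p1]

blocks = [lambda f: 2.0 * f] * 3
heads = [toy_head] * 3
easy = early_exit_predict(3.0, blocks, heads)   # confident after one block
hard = early_exit_predict(0.1, blocks, heads)   # needs all three blocks
```

Here the easy input leaves after the first block, while the hard input is carried through the full depth; the threshold controls the trade-off between computation and accuracy.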

We can skip the layers in the following ways:-

The above image represents the block architecture of a sample-wise dynamic network with a gate module.

The above image represents a block architecture of a sample-wise dynamic network with a policy network.
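The two block diagrams differ in where the skip decision is made. With gate modules, each block decides for itself, based on the feature it receives; with a policy network, one module decides for all blocks up front. A minimal sketch of both, assuming residual blocks so that a skipped block reduces to the identity (the function names and the magnitude-based gate are hypothetical stand-ins for learned modules):

```python
def run_with_gates(x, layers, gates, threshold=0.5):
    """Each gate looks at the current feature and decides whether to run its layer."""
    executed = []
    for layer, gate in zip(layers, gates):
        keep = gate(x) >= threshold
        if keep:
            # Residual connection: output = input + layer(input).
            x = [xi + yi for xi, yi in zip(x, layer(x))]
        executed.append(keep)  # a skipped layer leaves x unchanged
    return x, executed

def run_with_policy(x, layers, policy):
    """A policy sees the raw input once and fixes the keep/skip plan for all layers."""
    decisions = policy(x)
    for layer, keep in zip(layers, decisions):
        if keep:
            x = [xi + yi for xi, yi in zip(x, layer(x))]
    return x, decisions

def add_one(v):
    """Toy residual branch: contributes +1 to every entry."""
    return [1.0 for _ in v]

def magnitude_gate(v):
    """Hypothetical gate score: mean absolute value of the feature."""
    return sum(abs(a) for a in v) / len(v)

layers = [add_one] * 3
gates = [magnitude_gate] * 3
easy_out, easy_run = run_with_gates([0.1, 0.2], layers, gates, threshold=1.0)
hard_out, hard_run = run_with_gates([2.0, 3.0], layers, gates, threshold=1.0)
plan_out, plan = run_with_policy([0.0, 0.0], layers, lambda v: [True, False, True])
```

In a trained model the gate and the policy would be small learned networks; the hand-written rules here only show the control flow.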

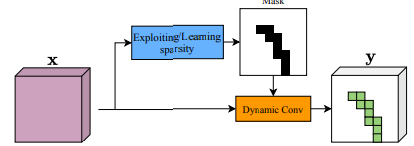

Spatial-wise dynamic networks are designed mainly for computer vision problems. In most image-processing tasks, not all pixels contribute equally to the result, yet static models spend the same computation on every pixel. This is a drawback of static neural networks: much of the computation is redundant, and the energy invested by the model is high relative to the output obtained. Spatial-wise dynamic networks instead perform spatially dynamic computation, which reduces this computational redundancy. In other words, by working only on the locations that are responsible for the output, a model can reach higher accuracy with less computational energy.

Spatial-wise dynamic networks are built to adapt inference to the different locations in an image. In this type of network, the convolutional layers and filters process selected locations at a given granularity. At the finest granularity, adaptivity is achieved by varying depth and width at the pixel level. In short, these models allocate computation spatially.

These models can be divided into three types according to the granularity at which they adapt: pixel level, region level, and resolution level.

The above image represents a pixel-level dynamic network, in which the black portion of the image determines the pixels (green) that require computation.
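Pixel-level computation can be pictured as a binary mask over the feature map: the expensive operation runs only where the mask is set, and all other positions are passed through untouched. The sketch below assumes a simple magnitude threshold as the mask generator; `sparse_apply` and `magnitude_mask` are hypothetical names, and in a real model the mask would be predicted by a learned module.

```python
def magnitude_mask(feature_map, threshold):
    """Mark positions whose activation magnitude exceeds the threshold."""
    return [[abs(v) > threshold for v in row] for row in feature_map]

def sparse_apply(feature_map, mask, expensive_op):
    """Apply expensive_op only at masked positions; copy the rest unchanged.

    Returns (output_map, number_of_positions_actually_computed).
    """
    out = [row[:] for row in feature_map]
    computed = 0
    for i, row in enumerate(feature_map):
        for j, value in enumerate(row):
            if mask[i][j]:
                out[i][j] = expensive_op(value)
                computed += 1
    return out, computed

fm = [[0.0, 0.1, 0.0],
      [0.2, 5.0, 4.0],
      [0.0, 3.0, 0.1]]
mask = magnitude_mask(fm, threshold=1.0)
out, computed = sparse_apply(fm, mask, lambda v: v * v)
```

Only three of the nine positions are processed in this toy input. The per-position branching is also why such sparse schemes are hard to run efficiently on hardware built for dense, regular computation.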

Pixel-level dynamic networks require a higher level of computation, which can slow down image processing, and using these models may require dedicated hardware acceleration. As an alternative, we can use a region-level dynamic network, which adapts its computation to regions or patches of the input image. A model's computation can be made adaptive to regions either by applying a parameterized transformation to a region of the feature map or by learning patch-level dynamic transformations.

The above image is a representation of the region-level dynamic network where the region selection module generates the transformation parameters and the selected region is further processed by the network.
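A rough sketch of the region-level idea follows. It assumes the simplest possible "transformation parameters", namely the top-left offset of a fixed-size crop, and uses a hand-written saliency rule (largest window sum) in place of a learned region selection module; `select_region` and `crop` are hypothetical helpers.

```python
def select_region(image, patch):
    """Return (top, left) of the patch x patch window with the largest sum."""
    best_score, best_pos = None, (0, 0)
    n_rows, n_cols = len(image), len(image[0])
    for top in range(n_rows - patch + 1):
        for left in range(n_cols - patch + 1):
            score = sum(image[top + a][left + b]
                        for a in range(patch) for b in range(patch))
            if best_score is None or score > best_score:
                best_score, best_pos = score, (top, left)
    return best_pos

def crop(image, top, left, patch):
    """Cut out the selected region so that later layers see only this patch."""
    return [row[left:left + patch] for row in image[top:top + patch]]

image = [[0, 0, 0, 0],
         [0, 0, 0, 0],
         [0, 1, 1, 0],
         [0, 1, 1, 0]]
top, left = select_region(image, patch=2)
region = crop(image, top, left, patch=2)
```

The downstream network then processes 4 values instead of 16. Because the selected region is a dense rectangle, it maps onto standard hardware more easily than a scattered pixel mask.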

The methods discussed above share one problem: they must divide feature maps into different areas, because they take pixels or patches of the image into account for adaptive computation. Resolution is another major factor in image processing that a model can exploit for better accuracy. A resolution-level dynamic network processes the whole image but uses feature representations at adaptive resolutions: a low-resolution representation is enough for an easy sample, while a high-resolution representation is kept for a hard sample. Hence we can say that the resolution-level dynamic network exploits spatial redundancy from the perspective of feature resolution.
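The resolution-level strategy can be sketched as a coarse-to-fine loop: classify a heavily downsampled copy first, and move to a finer copy only when the prediction is not confident. Everything named here (`avg_pool`, `adaptive_resolution_predict`, `toy_classifier`, the 0.8 threshold) is an assumption made for illustration.

```python
def avg_pool(image, factor):
    """Downsample a square 2-D list by averaging factor x factor tiles."""
    n = len(image) // factor
    return [[sum(image[i * factor + a][j * factor + b]
                 for a in range(factor) for b in range(factor)) / factor ** 2
             for j in range(n)] for i in range(n)]

def adaptive_resolution_predict(image, classifier, factors=(4, 2, 1), threshold=0.8):
    """Try coarse inputs first; use a finer resolution only when unsure.

    classifier : callable, image -> (label, confidence)
    Returns (label, downsampling_factor_used).
    """
    for factor in factors:
        scaled = image if factor == 1 else avg_pool(image, factor)
        label, confidence = classifier(scaled)
        if confidence >= threshold or factor == factors[-1]:
            return label, factor

def toy_classifier(image):
    """Toy rule: 'bright' if any pixel is above 0.5; confidence is distance from 0.5."""
    peak = max(max(row) for row in image)
    label = "bright" if peak > 0.5 else "dark"
    return label, max(peak, 1.0 - peak)

dark = [[0] * 4 for _ in range(4)]                                # easy sample
checker = [[(i + j) % 2 for j in range(4)] for i in range(4)]     # fine detail
easy_result = adaptive_resolution_predict(dark, toy_classifier)
hard_result = adaptive_resolution_predict(checker, toy_classifier)
```

The uniform image is settled on a single averaged pixel, whereas the checkerboard averages to an ambiguous grey at coarse scales and is only resolved at full resolution.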

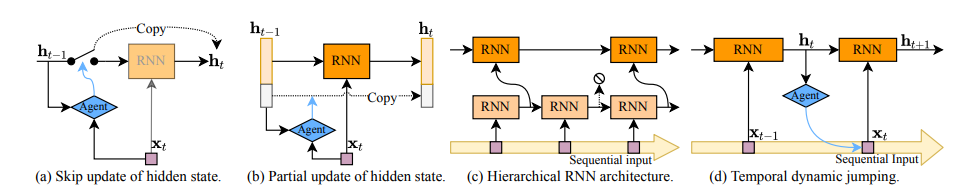

As the name suggests, temporal-wise dynamic networks perform adaptive computation on temporal or sequential data such as time series or text. In spatial-wise dynamic models, we saw how a model's computation can adapt to image features at the level of pixels, patches, and resolution. In the same way, a model's computation can adapt along a sequence: the model identifies which portions of the sequence have a major effect on the result and which have little or none, so that the two can be separated and treated differently to achieve higher accuracy. A model that is trained to distinguish between portions of sequential data in this way, and that can adapt to changes in the data, is called a temporal-wise dynamic network. These models can be categorized in two ways according to the data they use.

The above image shows block diagrams of the steps in temporal-wise dynamic networks. The first three approaches dynamically allocate computation at each step by skipping the update, partially updating the state, or performing conditional computation in a hierarchical structure. The agent in (d) decides where to read in the next step.
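The first of these approaches, skipping the update, is the simplest to sketch: at each step the model either updates its hidden state or copies the previous state forward. The recurrence below is a toy scalar one, and `skip_rnn`, the `importance` score, and the 0.5 threshold are hypothetical; a real model would learn the skip decision.

```python
import math

def skip_rnn(sequence, update, importance, threshold=0.5):
    """Scan a sequence, updating the hidden state only on important steps.

    update     : callable, (state, token) -> new state
    importance : callable, (token, state) -> score; low scores skip the update
    Returns (final_state, number_of_updates_performed).
    """
    state, updates = 0.0, 0
    for token in sequence:
        if importance(token, state) >= threshold:
            state = update(state, token)
            updates += 1
        # Otherwise the previous state is carried forward unchanged.
    return state, updates

def toy_update(state, token):
    """Toy scalar recurrence standing in for an RNN cell."""
    return math.tanh(0.5 * state + token)

def toy_importance(token, state):
    """Hypothetical importance score: the magnitude of the incoming token."""
    return abs(token)

sequence = [0.0, 0.1, 2.0, 0.0, -1.5, 0.05]
state, updates = skip_rnn(sequence, toy_update, toy_importance)
# Reference: feeding only the two informative tokens gives the same state.
state_ref, _ = skip_rnn([2.0, -1.5], toy_update, toy_importance)
```

Four of the six steps cost nothing beyond the importance check, and the final state matches what the model would compute from the informative tokens alone.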

In this article, we got an overview of dynamic neural networks and saw how they can be divided into three categories. Temporal-wise and spatial-wise dynamic networks are task-specific: they are used for modelling sequential data and images, respectively. Sample-wise dynamic networks can be used for predictive analysis, because they adapt the computation to each sample, spending less on easy samples and more on hard ones, which saves energy and improves representation power. We also saw some of the advantages of dynamic neural networks and how they help improve overall performance.
