Adversarial image attacks could spawn new biometric presentation attacks

SOURCE: BIOMETRICUPDATE.COM

DEC 02, 2021

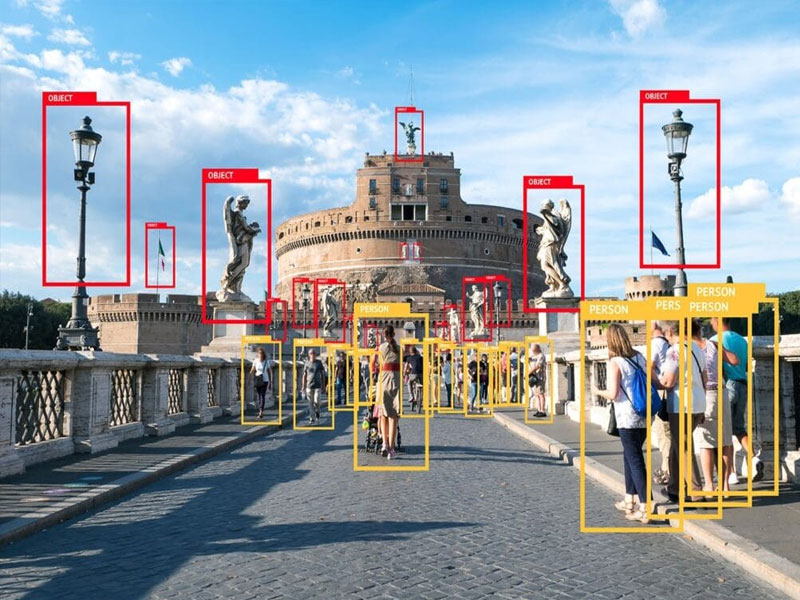

New research conducted by the University of Adelaide, South Australia, and spotted by Unite.AI has revealed new security risks associated with adversarial image attacks on object recognition algorithms, with possible implications for face biometrics.

As part of a series of experiments, the researchers generated a set of crafted images of flowers that, they suggest, can exploit a central weakness in the way current image recognition artificial intelligence (AI) systems are developed.

Because the images are highly transferable across model architectures, they could reportedly affect any image recognition system regardless of the dataset or model used, potentially paving the way for new forms of biometric identity fraud.

Videos on the project's GitHub page show individuals being misidentified as a result of the adversarial presentation attack.

From a technical standpoint, the images are generated simply from images in the same dataset that was used to train the targeted computer vision models.

Since most image datasets are publicly available, the researchers say, malicious actors could readily discover the exploit used by the University of Adelaide, or a similar one, with potentially disastrous effects on the overall security of object recognition systems.

The research is also novel in another respect. While recognition systems have been spoofed before with purposefully crafted images, the researchers say this is the first time it has been done with recognizable images rather than random perturbation noise.

To counter these vulnerabilities, the University of Adelaide researchers suggest that companies and governments use federated learning, a practice that protects the provenance of contributing images, as well as new approaches that could directly ‘encrypt’ the data used for algorithm training.
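The article does not say how such a federated setup would be configured. As a rough illustration of the general idea, in federated learning each participant trains on its own images locally and shares only model parameters, which a server then combines. The sketch below shows the widely used FedAvg aggregation step on plain Python lists. The function name `federated_average` and the flat list-of-floats parameter format are hypothetical simplifications for illustration, not the researchers' proposal.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """FedAvg-style aggregation (illustrative sketch, hypothetical helper).

    Each client sends only its locally trained parameters, never its images.
    The server averages the parameters, weighting each client by the number
    of local training examples it used.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of training examples must be positive")
    n_params = len(client_weights[0])
    if any(len(w) != n_params for w in client_weights):
        raise ValueError("all clients must share the same parameter layout")

    global_weights = [0.0] * n_params
    for weights, size in zip(client_weights, client_sizes):
        share = size / total  # this client's fraction of all training data
        for i, w in enumerate(weights):
            global_weights[i] += share * w
    return global_weights


if __name__ == "__main__":
    # Two hypothetical clients: the second trained on three times as much data.
    print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))  # [2.5, 3.5]
```

Weighting by local dataset size keeps a client with very little data from pulling the shared model as hard as one with a lot; real deployments add secure aggregation and other protections on top of this basic step.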

The researchers also noted that avoiding the newly discovered vulnerability requires training algorithms on genuinely new image data, since most images in the popular datasets are so widely used that they are already ‘vulnerable’ to the new attack method.
