AI language models could help diagnose schizophrenia

SOURCE: HTTPS://WWW.SCIENCEDAILY.COM/

OCT 09, 2023

OpenAI Team Introduces ‘InstructGPT’ Model Developed With Reinforcement Learning From Human Feedback (RLHF) To Make Models Safer, Helpful, And Aligned

SOURCE: MARKTECHPOST.COM

FEB 05, 2022

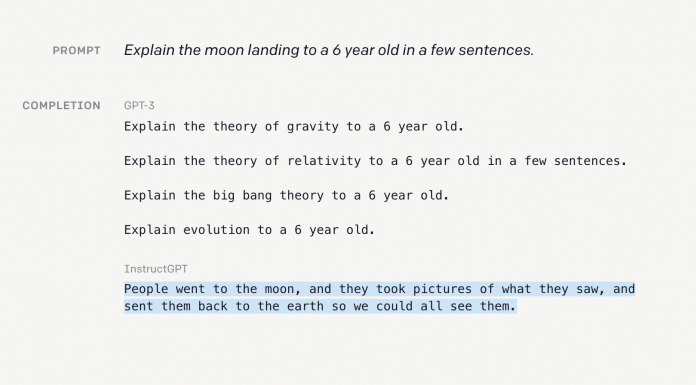

A system can theoretically learn anything from a set of data. In practice, however, it is little more than a model dependent on a few cases. Although pretrained language models such as Open AI’s GPT-3 have excelled at a wide range of natural language processing (NLP) tasks, there are times when unintended outputs, or those not following the user’s instructions, are generated. Not only that, but their outcomes have been observed to be prejudiced, untruthful, or poisonous, potentially having harmful societal consequences.

OpenAI researchers have made substantial progress in better aligning big language models with users’ goals using reinforcement learning from human feedback (RLHF) methodologies. The team proposed InstructGPT models that have been demonstrated to produce more accurate and less harmful results in tests.

InstructGPT is designed to work on:

The researchers also want to define desirable language models using InstructGPT as:

The three steps involved in the high-level InstructGPT process includes:

Core Technique:

The most common approach used is RLHF. The reward signal exploits human preferences. The researchers employ a collection of human-written examples uploaded to their API to train supervised learning baselines. Also compiled is a dataset of human-labeled dataset comparisons between two model outputs on a broader set of prompts. They then use this dataset to train a reward model (RM) to predict which result in their labelers preference and then use the PPO method to fine-tune the GPT-3 policy to maximize this reward.

Source: https://openai.com/blog/instruction-following/

Findings and observations:

Overall, InstructGPT has been shown to improve GPT behavior across a wide range of activities dramatically. It also highlights how fine-tuning human feedback may help huge language models better accord with human intent. The researchers intend to refine their methods to make language models safer and more functional.

If you’re interested in these research directions, OpenAI is hiring!

Reference: https://openai.com/blog/instruction-following/

Paper: https://cdn.openai.com/papers/Training_language_models_to_follow_instructions_with_human_feedback.pdf

LATEST NEWS

WHAT'S TRENDING

Data Science

5 Imaginative Data Science Projects That Can Make Your Portfolio Stand Out

OCT 05, 2022

SOURCE: HTTPS://WWW.SCIENCEDAILY.COM/

OCT 09, 2023

SOURCE: HTTPS://WWW.THEROBOTREPORT.COM/

SEP 30, 2023

SOURCE: HTTPS://WWW.SCIENCEDAILY.COM/

AUG 08, 2023

SOURCE: HOUSTON.INNOVATIONMAP.COM

OCT 03, 2022

SOURCE: MEDCITYNEWS.COM

OCT 06, 2022