Researchers at Watson College creating machines that think

SOURCE: BINGHAMTON.EDU

JUN 25, 2022

When most people think of autonomous systems, they often imagine self-driving cars, but that’s just part of this wide area of research. Creating a machine that can make its own decisions in real time involves a dazzling array of challenges, ranging from robotics to software design, coding, mathematics, systems science and more.

Assistant Professor of Mechanical Engineering Kaiyan Yu offers a definition that captures the field’s breadth: “Autonomous systems are capable of carrying out a complex series of actions automatically. It’s a cross-disciplinary field, involving computer science, mechanical engineering, electrical engineering and even the cognitive sciences.”

Systems can be semi- or fully autonomous and involve a wide range of applications, from agriculture to healthcare management to control of traffic lights in smart cities and much more, Yu says.

In fact, autonomous systems are already part of our daily life, from thermostat control systems in smart homes to cellphone chatbots, Assistant Professor of Computer Science Shiqi Zhang points out. Quite a few Binghamton University alumni work at financial technology companies in New York City building autonomous systems — Zhang prefers the term “robot,” broadly defined — for investment management and other tasks, for example.

Here, you’ll meet Watson College researchers who are tackling complex problems and laying the foundation for a high-tech future where machines can think, make decisions and, yes, drive the car.

PhD students Kishan Chandan, left, and Vidisha Kudalkar work in Assistant Professor Shiqi Zhang’s Autonomous Intelligent Robotics Lab in the Engineering Building at Watson College. Image Credit: Jonathan Cohen.

Zhang works with artificial intelligence (AI) algorithms and neural networks, a type of data-driven machine learning. Ultimately, his research teaches machines to learn, reason and act — and even out-compete humans in certain tasks.

In his research group, Zhang and his students focus on everyday scenarios, such as homes, hospitals, airports and shopping centers. Their work also focuses on three research themes: robot decision-making, human-robot interaction and robot task-motion planning. In their decision-making research, supported through a National Science Foundation grant, they develop algorithms that combine rule-based AI methods and data-driven machine learning methods.

“The former is good at incorporating human knowledge, and the latter makes it possible to improve decision-making from past experience,” Zhang says.
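To make the idea concrete, here is a minimal Python sketch of one common way to pair the two approaches. It assumes that hand-written rules act as hard constraints and that a learned value table ranks whatever actions the rules allow. The rule, action names and learning rate are hypothetical illustrations, not the lab's published algorithms.

```python
from typing import Callable, Dict, List

# A rule returns True if the action is permitted in the given state.
Rule = Callable[[dict, str], bool]


def no_entry_when_door_closed(state: dict, action: str) -> bool:
    # Hypothetical rule encoding human knowledge: a robot cannot drive through a closed door.
    return not (action == "enter_room" and state.get("door") == "closed")


def choose_action(state: dict, actions: List[str], rules: List[Rule],
                  learned_value: Dict[str, float]) -> str:
    """Rule-based filter first, then pick the allowed action with the best learned value."""
    allowed = [a for a in actions if all(rule(state, a) for rule in rules)]
    if not allowed:
        raise ValueError("no action satisfies the rules")
    # Actions never tried before default to a value of 0.0.
    return max(allowed, key=lambda a: learned_value.get(a, 0.0))


def update_value(learned_value: Dict[str, float], action: str,
                 reward: float, learning_rate: float = 0.1) -> None:
    """Data-driven part: nudge the stored value toward the reward just observed."""
    old = learned_value.get(action, 0.0)
    learned_value[action] = old + learning_rate * (reward - old)
```

In this sketch the rules guarantee that a forbidden action is never chosen, whatever the learned values say, while experience is free to reorder the actions that remain.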

To make rational decisions, an autonomous system must figure out the current state of the world, a process that researchers term “perception.” Reasoning helps a robot figure out what it should do once it perceives that state. With actualization, the robot carries out the decided-upon behavior. AI methods have been used in all three processes, but so far they have been most successful at perception.
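The perceive, reason and act cycle can be sketched as a simple loop. This toy example assumes a robot moving along a straight line with a single range sensor; the distance threshold, step size and function names are hypothetical, and real perception would rely on learned models rather than one number.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class WorldState:
    obstacle_distance_m: float  # estimated distance to the nearest obstacle ahead


def perceive(range_reading_m: float) -> WorldState:
    # Perception: turn a raw sensor reading into a world-state estimate
    # (here we only discard impossible negative readings).
    return WorldState(obstacle_distance_m=max(0.0, range_reading_m))


def reason(state: WorldState, safe_distance_m: float = 1.0) -> str:
    # Reasoning: decide what to do given the perceived state.
    return "stop" if state.obstacle_distance_m < safe_distance_m else "forward"


def act(command: str, position_m: float, step_m: float = 0.5) -> float:
    # Acting ("actualization"): carry out the decision by updating the robot's position.
    return position_m + step_m if command == "forward" else position_m


def run(readings_m: List[float]) -> Tuple[List[str], float]:
    """Run one perceive-reason-act cycle per sensor reading."""
    position_m = 0.0
    commands: List[str] = []
    for reading in readings_m:
        command = reason(perceive(reading))
        position_m = act(command, position_m)
        commands.append(command)
    return commands, position_m
```

For readings of 3.0, 2.0 and 0.8 metres, the loop moves forward twice and then stops, ending 1.0 m from where it started.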

“AIs can do better than people on benchmark tasks, such as image recognition and speech recognition, under some conditions. A robot can know the world state much, much better than 10 years ago,” Zhang says. “That definitely helps the robot a lot with decision-making and reaching the next level of autonomy.”

That doesn’t mean that robots will surpass humans in decision-making anytime soon. Humans, for example, realize that someone can’t be in two places at the same time, and that dropped objects fall to the ground. AIs need to be taught to factor these realities into their reasoning processes.

“Machines don’t have common sense. There are many things that are obvious to people, but not to AIs,” Zhang reflects. “Data-driven methods are not very effective in incorporating common sense. We don’t want a self-driving car to learn to avoid running into people by doing that many times.”

When it comes to human-robot interaction, the research group is interested in dialog-based and augmented reality (AR)-based systems. Dialog systems help robots estimate a human’s mental state, and AR systems visualize a robot’s mental state for human decision-making, Zhang says. Long-term, he would like to see more research on the creation of transparent and trustworthy AI systems, and the use of this technology to improve the lives of physically challenged and elderly people.

“I’d like to see robots outside labs more, and see people and robots work with each other in human-inhabited environments. I’d like to see a robot being able to make sense of its own behaviors,” he says.

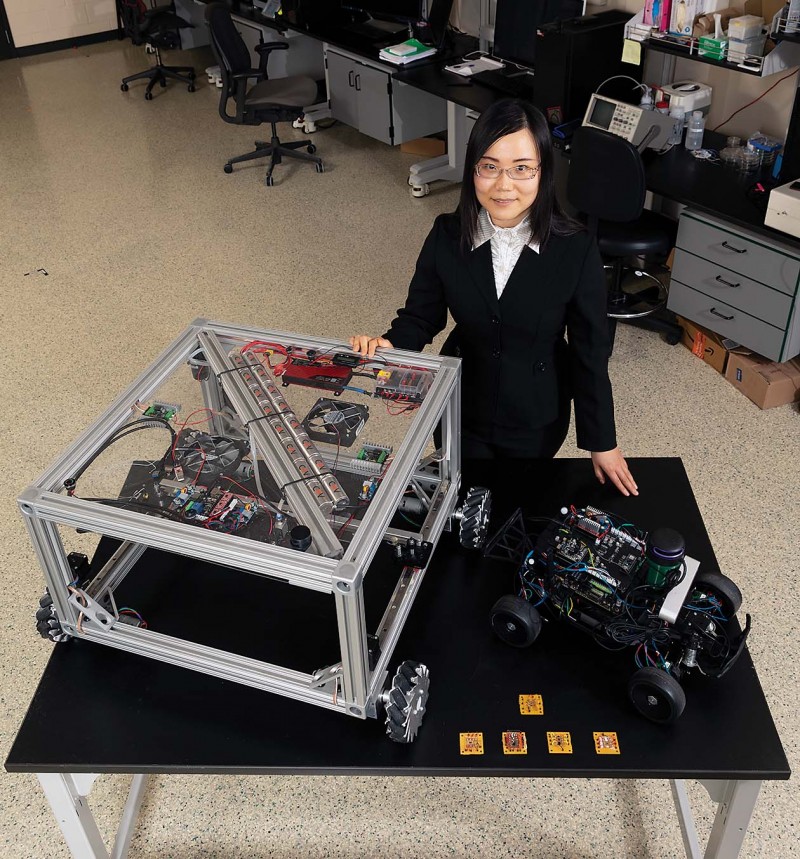

Assistant Professor Kaiyan Yu is working on robotics projects both large and microscopic. Image Credit: Jonathan Cohen.

Tiny robots that travel in your bloodstream, conducting repairs and delivering medications to their target. Massive robots that prowl the roads, finding cracks before your car’s front wheel does.

Yu is working to develop smart systems on both the micro- and macro-scale of robotics. The potential of both is vast.

On the micro-scale, she researches the manipulation of nanoparticles, a precursor to more fully realized nanobots that could be used in a wide range of applications, from minimally invasive surgery and drug delivery to industrial use in microelectronics assembly and packaging.

This spring, she received a $588,608 National Science Foundation CAREER Award to explore how to control multiple nanosized objects using electric fields. Fully realized nanobots are still a way off; scientists first need to develop and fabricate small-scale actuators, sensors and communication devices, a necessary step for automation.

“I want to precisely, efficiently and automatically control and manipulate as many of these tiny particles as possible so that I can use them to assemble different functional nanodevices, interconnects and other useful components in various applications,” she says.

Yu also explores larger-scale problems, such as the dangers of driving on icy roads. Using a scaled-down car, she’s developing an algorithm that can detect slippery road surfaces and prevent skidding.
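The article does not describe how Yu's algorithm works. One widely used signal for spotting a slippery surface is the wheel slip ratio, which compares how fast a wheel's surface is turning with how fast the vehicle is actually moving (this requires an independent estimate of vehicle speed). The sketch below assumes that approach, with a hypothetical 0.2 threshold and a crude torque cut; it is an illustration, not her method.

```python
def slip_ratio(wheel_speed_rad_s: float, wheel_radius_m: float,
               vehicle_speed_m_s: float) -> float:
    """Longitudinal slip: (wheel surface speed - vehicle speed) / larger of the two.
    Positive means the wheel spins faster than the car moves (spinning out);
    negative means it turns slower (locking up under braking)."""
    wheel_surface_m_s = wheel_speed_rad_s * wheel_radius_m
    denom = max(abs(wheel_surface_m_s), abs(vehicle_speed_m_s))
    if denom < 1e-6:
        return 0.0  # car and wheel essentially at rest: treat as no slip
    return (wheel_surface_m_s - vehicle_speed_m_s) / denom


def is_slipping(ratio: float, threshold: float = 0.2) -> bool:
    # Hypothetical threshold; real systems tune this per tire and surface.
    return abs(ratio) > threshold


def limit_torque(requested_nm: float, ratio: float,
                 threshold: float = 0.2, cut: float = 0.5) -> float:
    """Crude traction control: reduce drive torque while slip exceeds the threshold."""
    return requested_nm * cut if is_slipping(ratio, threshold) else requested_nm
```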

And then there is the surface crack repair robot, which glides over a road surface to detect and repair cracks without human guidance.

Some people may fear that a robotic future could lead to job losses, she acknowledges. Instead, she points to the positives: Robots will be able to tackle repetitive and dangerous work, freeing human beings to engage in more meaningful pursuits.

“I hope that robots can benefit human beings. They can actually create new job opportunities, since robots need to be designed and maintained,” she says. “Robots will be more perceptive and dexterous. Robots can work with humans, interacting and collaborating with them.”

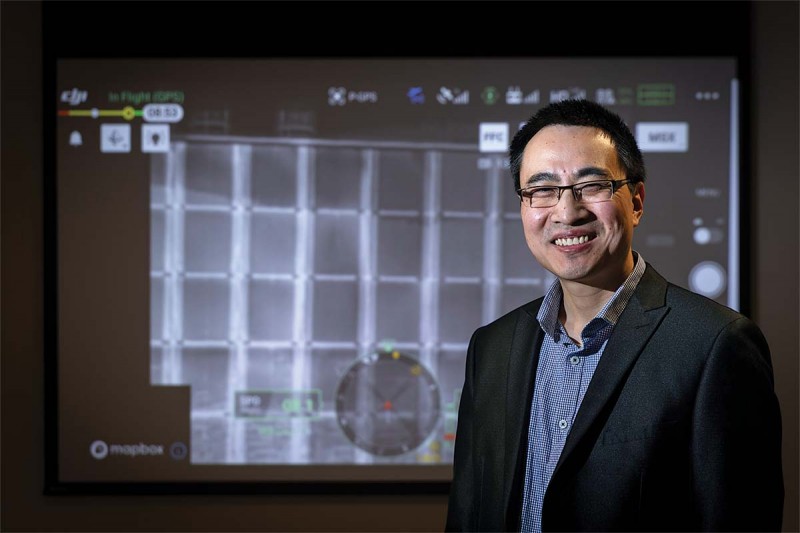

Assistant Professor Yong Wang is working on making driving safer in India. Image Credit: Jonathan Cohen.

Every year around the world, about 1.3 million people die in traffic fatalities, and millions more suffer nonfatal injuries that lead to long-term disability.

India ranks first in the number of road accident deaths, accounting for around 10% of the global total. Worldwide, 90% of casualties happen in developing countries where the laws of the road may be seen as merely inconvenient suggestions. And among all the states in India, Tamil Nadu — located at the southeastern tip of the country — reports the highest number of road accidents.

Not coincidentally, when Binghamton University and Watson College established a collaborative Center of Excellence in 2019 with the Vellore Institute of Technology (VIT) in Tamil Nadu, they focused on autonomous vehicle research.

For Indians, finding a safer way to navigate the streets is a matter of life and death.

Through the Watson Institute for Systems Excellence (WISE), Assistant Professor of Systems Science and Industrial Engineering Yong Wang is working with VIT Professor P. Shunmuga Perumal on ways to overcome the challenges, which include inconsistent lane markings; lack of traffic lights and pedestrian crossings; and the unique mix of trucks, cars, two- and three-wheeled vehicles, bicycles and autorickshaws. Researchers drove around Vellore and collected video that could be analyzed using a deep-learning algorithm.

“By combining an object detection network and a lane detection network with a system that ties everything together, we came up with eight different road scenarios corresponding to a different command to the vehicle control system,” Wang says.
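Wang's description suggests a pipeline in which detector outputs are combined into a discrete scenario that selects a control command. The article does not list the eight scenarios, so the three yes/no cues and the commands below are purely hypothetical; they were chosen only because three binary cues yield exactly 2^3 = 8 combinations.

```python
from typing import Dict, NamedTuple


class Perception(NamedTuple):
    lane_visible: bool     # hypothetical cue from a lane detection network
    vehicle_ahead: bool    # hypothetical cue from an object detection network
    vulnerable_user: bool  # hypothetical cue: pedestrian or two-wheeler nearby


def scenario_id(p: Perception) -> int:
    """Pack three yes/no cues into one of eight scenario indices (0-7)."""
    return (int(p.lane_visible) << 2) | (int(p.vehicle_ahead) << 1) | int(p.vulnerable_user)


# Hypothetical command for each scenario, indexed by scenario_id.
COMMANDS: Dict[int, str] = {
    0b000: "slow_follow_road_edge",
    0b001: "slow_and_yield",
    0b010: "slow_keep_distance",
    0b011: "brake",
    0b100: "keep_lane",
    0b101: "keep_lane_and_yield",
    0b110: "keep_lane_follow_vehicle",
    0b111: "brake",
}


def command_for(p: Perception) -> str:
    return COMMANDS[scenario_id(p)]
```

A lookup table like this keeps the mapping from scenario to command explicit and easy to audit, separate from the neural networks that produce the cues.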

Wang has other ideas he’s exploring. One industry-sponsored project would check warehouse inventory after hours with indoor drones. Another would fly drones above parking areas to monitor open spots and report to drivers on a smartphone app. He also advised a senior project group using drones to check solar panels.

“As drones get cheaper, everybody’s trying to come up with new ways of utilizing them, not only for videos but maybe for prescription or grocery delivery,” he says. Drone regulation is the next step so that they don’t interfere with airports and military bases: “Technology is moving much faster than the policy-making process, but I think the policies will gradually catch up.”

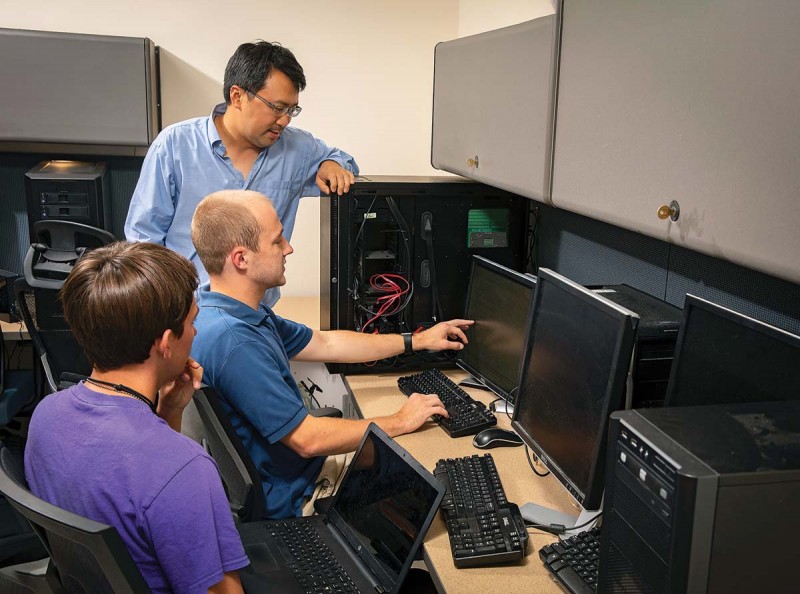

Professor Yu “David” Liu discusses ideas in his lab with students Jeffery Eymer, center, and David Fletcher. Image Credit: Jonathan Cohen.

Professor of Computer Science Yu “David” Liu wants to make autonomous systems safer, and that means a deep look into the software that drives them.

Without software, a car or drone is simply a collection of metal and plastic. Programs such as autopilot make the decisions that drive the vehicle, from where it’s headed to how fast it’s going and what obstacles lie in its path.

Because of this, safety must become a major part of software design for autonomous systems. Otherwise, consequences could be catastrophic and expensive: a self-driving car that can’t detect a building, for example, or a drone falling from the sky in a crowded area. Routinely deployed in challenging terrain, unmanned aerial vehicles (UAVs) and unmanned underwater vehicles (UUVs) may be difficult to reclaim if they fail to return on their own.

“We are developing programming tools so that an autopilot program can be developed in a ‘safe-by-design’ manner. We also study how existing programs written for autonomous systems can be verified and/or tested,” he says.
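One established pattern for safe-by-design control is a runtime monitor (sometimes called a simplex or runtime-assurance architecture): a small, easily checked component passes the autopilot's commands through only while they stay inside a safety envelope, and otherwise substitutes a conservative fallback. The sketch below assumes that pattern; the limits and the hover-style fallback are hypothetical, and it does not represent the tools Liu's group builds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Command:
    speed_m_s: float
    altitude_m: float


@dataclass(frozen=True)
class Envelope:
    # Hypothetical limits chosen for illustration only.
    max_speed_m_s: float = 15.0
    min_altitude_m: float = 5.0
    max_altitude_m: float = 120.0


def is_safe(cmd: Command, env: Envelope) -> bool:
    return (0.0 <= cmd.speed_m_s <= env.max_speed_m_s
            and env.min_altitude_m <= cmd.altitude_m <= env.max_altitude_m)


def fallback(env: Envelope, current_altitude_m: float) -> Command:
    # Conservative action: stop moving and hold an altitude clamped into the envelope.
    held = min(max(current_altitude_m, env.min_altitude_m), env.max_altitude_m)
    return Command(speed_m_s=0.0, altitude_m=held)


def guarded(cmd: Command, env: Envelope, current_altitude_m: float) -> Command:
    """Forward the autopilot's command only if it stays inside the safety envelope."""
    return cmd if is_safe(cmd, env) else fallback(env, current_altitude_m)
```

The appeal of this structure is that, in principle, only the small monitor and fallback need to be verified to guarantee the envelope holds, rather than the entire autopilot.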

Much of the research in autonomous systems is algorithm-centric, focused on designing decision-making procedures for navigation or the detection of obstacles. Another direction is a systems-centric view, which looks at energy efficiency, performance, programmability, timeliness and machine learning capability, as well as safety. In 2011, Liu received a $479,391 NSF CAREER Award to explore “greener” software for both large-scale data centers and personal devices such as laptops and smartphones.

He would also like to see more research on the intersection of the field with laws, regulations and public policies.

“This is an emerging area for computer scientists and social scientists to collaborate in. For example, it will be exciting to see what regulations can be automatically enforced and verified by the software developed for autonomous systems,” he says. “Data collected from operating autonomous systems may offer insight to policymakers through machine learning, so that regulations are data-driven.”
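As a hypothetical example of a rule that software could check automatically, consider a no-fly zone defined as a circle around a point. The sketch below flags planned waypoints that fall inside any zone, using the haversine great-circle distance. The zone format and radii are assumptions and do not reflect any actual regulation.

```python
import math
from typing import List, Sequence, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius

# Hypothetical zone format: (latitude_deg, longitude_deg, radius_m).
Zone = Tuple[float, float, float]
Waypoint = Tuple[float, float]  # (latitude_deg, longitude_deg)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def violations(waypoints: Sequence[Waypoint], zones: Sequence[Zone]) -> List[int]:
    """Indices of waypoints that fall inside any no-fly zone."""
    return [i for i, (lat, lon) in enumerate(waypoints)
            if any(haversine_m(lat, lon, zlat, zlon) < radius
                   for zlat, zlon, radius in zones)]
```

A check like this could run before takeoff to reject a flight plan, and the same log of checks could later serve as data for the kind of policy analysis Liu describes.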

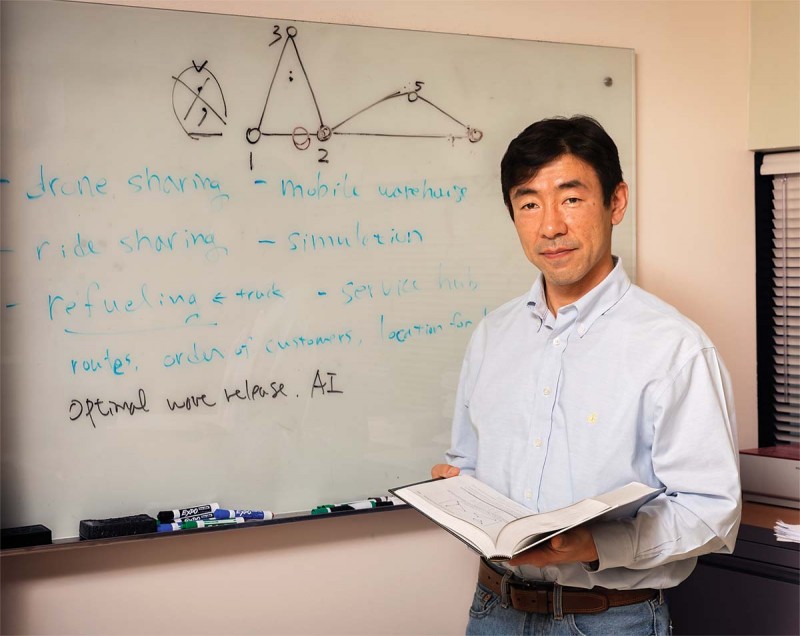

Assistant Professor Sung Hoon Chung calculates optimum routes for autonomous vehicles. Image Credit: Jonathan Cohen.

Like most travelers, you program your self-driving car to reach your destination as quickly as possible. The problem: Everyone else is doing the same thing, potentially creating traffic tie-ups on well-traveled roads. Plus, your car is electric and will need to recharge somewhere along the way. How will you — or your smart car — know which roads to take?

That’s where Assistant Professor of Systems Science and Industrial Engineering Sung Hoon Chung comes in. His research centers on complex, large-scale problems involving multiple agents and limited resources over time, using mathematical optimization to address conditions of uncertainty.

“If drivers want to reduce their travel time, it may not be optimal to choose the shortest path. From the systems perspective, what you want to do is minimize the total travel time of all the users,” Chung explains.
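Chung's point can be illustrated with a classic textbook case, Pigou's two-road example (used here for illustration, not as his own model). Road A always takes one hour; road B takes x hours when a fraction x of drivers uses it. Because B is never slower than A, every driver picks B and everyone spends an hour. Splitting traffic evenly cuts the average trip to 45 minutes.

```python
def average_time_h(x: float) -> float:
    """Average trip time (hours) when a fraction x of drivers takes road B.
    Road A always takes 1 hour; road B takes x hours, so it slows as it fills up."""
    return x * x + (1.0 - x) * 1.0


# Selfish routing: road B is never slower than road A (x <= 1), so in
# equilibrium every driver takes B and each trip lasts 1 hour.
selfish = average_time_h(1.0)

# System optimum: scan candidate splits and keep the one with the lowest average.
best_x = min((i / 1000 for i in range(1001)), key=average_time_h)
optimal = average_time_h(best_x)
```

Here `selfish` is 1.0 hour, while the best split is `best_x = 0.5` with an average of 0.75 hours: half the drivers take a route that is no faster for them, and everyone is better off on average.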

That involves changing the behavior of individuals to optimize the system. For example, a heavily traveled road might institute higher tolls during rush hour, reducing congestion and leading thrifty travelers to pick other routes.

Reducing congestion also reduces the possibility of crashes, Chung acknowledges. But there’s a sweet spot when it comes to pricing: If the toll is too low or too high, it won’t be as effective in reducing congestion, because too few or too many people would choose other roads.
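The pricing sweet spot shows up in the same textbook setting, Pigou's two-road example (an illustration, not Chung's model): road A always takes one hour, and tolled road B takes x hours when a fraction x of drivers uses it. Assuming drivers weigh the toll as if it were extra travel time, they keep switching to B until its travel time plus toll equals one hour. Tolls are payments rather than delay, so they are left out of the average travel time.

```python
def share_on_tolled_road(toll_h: float) -> float:
    """Equilibrium fraction of drivers on road B when a toll worth toll_h hours is charged.
    Drivers switch to B until its travel time (x) plus the toll equals road A's 1 hour."""
    return min(1.0, max(0.0, 1.0 - toll_h))


def average_time_with_toll_h(toll_h: float) -> float:
    x = share_on_tolled_road(toll_h)
    # The toll is a payment, not a delay, so only travel time is averaged.
    return x * x + (1.0 - x) * 1.0


curve = {toll: average_time_with_toll_h(toll) for toll in (0.0, 0.25, 0.5, 0.75, 1.0)}
```

In this toy network the average trip falls from 60 minutes with no toll to 45 minutes at a toll worth half an hour, then climbs back to 60 minutes as a higher toll pushes everyone onto road A.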

Systems science analyzes these complex factors and how they interact. Coupled with autonomous systems and tools such as mathematical optimization, data mining and machine learning, scientists can solve logistical problems in real time — adjusting tolls or rerouting vehicles, for example. Ultimately, Chung’s research may help pave the way for smart cities, in which resources are automatically adjusted to sustainably and efficiently meet the community’s needs.

A few years ago, Chung studied the optimal placement of charging stations for electric cars, one of the major obstacles to the widespread adoption of this technology. He focused on how to find the optimal locations for charging stations that could accommodate limited budgets and long-term plans. It could be a game-changer, and so could his most recent line of research: autonomous delivery drones, which could be used for a wide range of applications, including the delivery of crucial medicines to disaster areas.
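A simplified version of the siting problem is budgeted coverage: each candidate site serves some demand zones and has a cost, and the goal is to cover as many zones as possible within the budget. The sketch below uses a greedy heuristic (new zones covered per unit cost) purely as an illustration; the site names, costs and zones are hypothetical, and a greedy choice can miss the true optimum, which an exact method such as integer programming would find.

```python
from typing import Dict, List, Optional, Set


def greedy_sites(coverage: Dict[str, Set[str]], cost: Dict[str, float],
                 budget: float) -> List[str]:
    """Repeatedly add the affordable site that covers the most still-uncovered
    demand zones per unit cost, until no affordable site adds coverage."""
    chosen: List[str] = []
    covered: Set[str] = set()
    remaining = budget
    while True:
        best: Optional[str] = None
        best_ratio = 0.0
        for site, zones in coverage.items():
            if site in chosen or cost[site] > remaining:
                continue
            ratio = len(zones - covered) / cost[site]
            if ratio > best_ratio:
                best, best_ratio = site, ratio
        if best is None:
            return chosen
        chosen.append(best)
        covered |= coverage[best]
        remaining -= cost[best]


# Hypothetical candidate sites, the demand zones each would serve, and their costs.
example_coverage = {
    "A": {"z1", "z2", "z3"},
    "B": {"z3", "z4"},
    "C": {"z4", "z5"},
    "D": {"z1"},
}
example_cost = {"A": 3.0, "B": 1.0, "C": 2.0, "D": 1.0}
picked = greedy_sites(example_coverage, example_cost, budget=5.0)
```

On this example the greedy choice is B, D and C, covering four of the five zones, while A plus C would cover all five within the same budget of 5; that gap is why exact optimization matters for long-term infrastructure plans.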

The drones would be based on trucks that are themselves in motion. After making deliveries, the drones would return to the trucks to recharge and then take to the skies for another round. Ultimately, such delivery systems could reduce the number of vehicles on the ground, relieving traffic congestion while making the same number of deliveries as traditional methods.

“We focus on how we can optimally manage the use of the drones and trucks, and find their optimal routes to minimize flight time,” he says. “It’s very similar to the electric car research because drones also use batteries and have limited range, so they can’t be used for very long missions.”
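A heavily simplified piece of the truck-and-drone problem is deciding which truck stop each drone delivery should launch from, given limited battery range. The sketch below assumes straight-line flights on a flat map, a drone that returns to the same stop it left (real formulations let the truck keep moving and meet the drone later), and hypothetical coordinates and range; it is not Chung's model.

```python
import math
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float]  # (x_km, y_km) on a flat map


def assign_sorties(stops: List[Point], customers: Dict[str, Point],
                   drone_range_km: float) -> Dict[str, Optional[int]]:
    """For each customer, pick the truck stop with the shortest out-and-back flight.
    Customers no stop can serve within the battery range map to None (truck delivers)."""
    plan: Dict[str, Optional[int]] = {}
    for name, location in customers.items():
        best_stop: Optional[int] = None
        best_round_trip = math.inf
        for i, stop in enumerate(stops):
            round_trip = 2 * math.dist(stop, location)
            if round_trip <= drone_range_km and round_trip < best_round_trip:
                best_stop, best_round_trip = i, round_trip
        plan[name] = best_stop
    return plan


def total_flight_km(stops: List[Point], customers: Dict[str, Point],
                    plan: Dict[str, Optional[int]]) -> float:
    """Total drone distance flown for the deliveries assigned to drones."""
    return sum(2 * math.dist(stops[i], customers[name])
               for name, i in plan.items() if i is not None)
```

The range check plays the same role as an electric car's battery limit: any delivery that no stop can reach and return from is left to the truck.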

Posted in: Science & Technology, Watson
