White Castle to deploy voice-enabled digital signage in US

SOURCE: HTTPS://WWW.VERDICTFOODSERVICE.COM/

OCT 04, 2023

NVIDIA’s latest speech synthesis research makes AI voices more expressive and realistic

SOURCE: MARKTECHPOST.COM

SEP 01, 2021

Source: NVIDIA

Advanced AI models have transformed many natural language processing tasks. Speech synthesis is one such task that involves the artificial production of human speech. Synthesized voice has evolved from the monotone of robocalls to the polished tone of virtual assistants in smartphones and smart speakers, all thanks to artificial intelligence.

However, there is still a distinction between AI-generated speech and the human speech we hear in daily life and the media. This is because human speech has a complex rhythm, intonation, and timbre that AI cannot replicate.

NVIDIA is constantly engaged in developing breakthrough models and tools for high-quality, controllable speech synthesis that capture the richness of human speech without audio artifacts. They are showcasing their latest projects at the Interspeech 2021 conference, the World’s Largest Event on the Science and Technology of Spoken Language Processing. More than 1000 researchers present ground-breaking speech technology research on this platform. NVIDIA Research will present its conversational AI model architectures and fully prepared voice datasets for developers at this week’s conference.

Developers and creators can use these models to generate voices for characters, virtual assistants and personalized avatars. NVIDIA’s in-house creative team is even using the technology to create emotional narration for a video series about AI’s capability. Some of this cutting-edge work has been released open-source through the NVIDIA NeMo toolkit, optimized to run efficiently on NVIDIA GPUs.

NVIDIA researchers demonstrate ground-breaking speech synthesis models in their “I AM AI video series”, which features global AI innovators reshaping every industry imaginable.

However, these videos have initially been narrated by a human. It was noticed that the previous speech synthesis models had restricted control over the tempo and pitch of a synthetic voice. Due to this, attempts at AI narration failed to induce the emotional response in viewers that a skilled human speaker could.

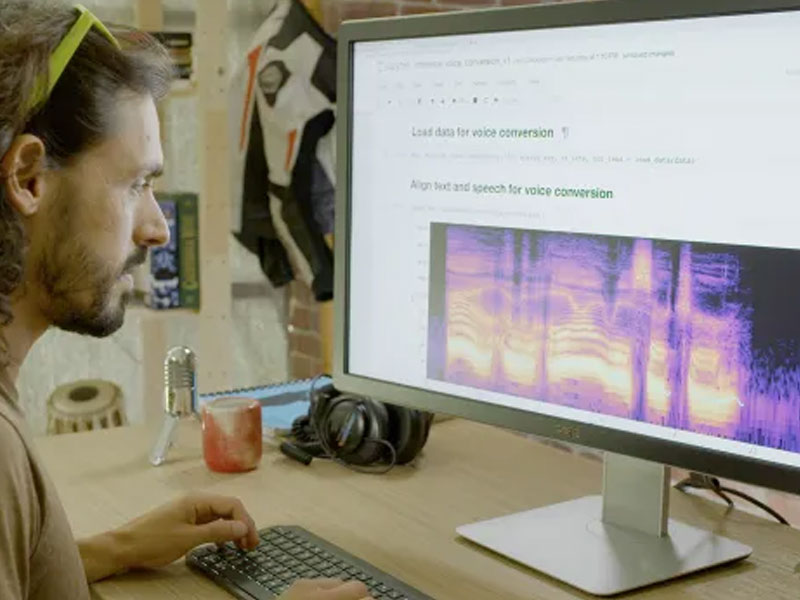

NVIDIA’s controllable speech synthesis models like RAD-TTS can convert any text prompt into the speaker’s voice by training the text-to-speech model with audio of an individual’s speech.

Voice conversion is another of its functions, in which one speaker’s words (or even singing) are delivered in the voice of another speaker. The RAD-TTS interface, which was inspired by the idea of the human voice as a musical instrument, allows users to fine-tune the synthesized voice’s pitch, duration, and energy at the frame level.

One of its use cases can be described when a video producer may use this interface to record himself reading the video script and then use the AI model to translate his speech into the female narrator’s voice. The producer might then direct the AI like a voice actor, altering the synthesized speech to accent keywords and changing the speed of the narration to portray the video’s tone better, using this baseline narration.

AI models can do much more than only voice-over work. Speech-to-text can be used to support people with voicing problems or help users translate between languages in their own voices. It can also recreate the performances of classic vocalists, which match not only a song’s melody but the emotional expression behind the singing.

NVIDIA NeMo is an open-source Python toolkit for GPU-accelerated conversational AI. This toolkit empowers researchers, developers, and creators to start experimenting with and fine-tuning speech models for their own applications.

The APIs and pretrained models available in NeMo are easy to use. With this, researchers can develop and customize models for text-to-speech, natural language processing and real-time automated speech recognition.

Many of the models are trained with thousands of hours of audio data offered at NVIDIA DGX systems. Developers can use mixed-precision computing on NVIDIA Tensor Core GPUs to speed up the training and fine-tune any model for their use cases.

Models trained on Mozilla Common Voice, a dataset with almost 14,000 hours of crowd-sourced speech data in 76 languages, are also available through NGC. With the world’s largest open data speech dataset, the initiative, which NVIDIA supports, intends to democratize voice technology.

LATEST NEWS

WHAT'S TRENDING

Data Science

5 Imaginative Data Science Projects That Can Make Your Portfolio Stand Out

OCT 05, 2022

SOURCE: HTTPS://WWW.VERDICTFOODSERVICE.COM/

OCT 04, 2023

SOURCE: HTTPS://RESEARCH.AIMULTIPLE.COM/

JUL 12, 2023

SOURCE: HTTPS://RESEARCH.AIMULTIPLE.COM/

JUL 11, 2023

SOURCE: TECHCRUNCH.COM

OCT 27, 2022

SOURCE: THEHINDU.COM

OCT 16, 2022