
How AI technology can aid natural language processing deployment

SOURCE: EENEWSANALOG.COM

SEP 16, 2022

Natural language processing (NLP) is at the forefront of the use of artificial intelligence (AI).

NLP models based on the ‘Transformer’ architecture show human-like understanding and processing in a variety of applications. These models are solving problems such as analyzing huge volumes of text, question answering (Q&A), chatbots, document search and more.

These models are set to play a key role in bringing once-inconceivable, science-fiction-like machine capabilities into these applications. Today’s NLP models are not limited to language-based problems; they also extend to fields such as computer vision, medical technologies, genome sequencing and more, carrying the same revolutionary potential into those applications.

However, these NLP models require a change in how AI is deployed: the models must be integrated within the services that use them, given the appropriate neural network compute capacity, and allowed to reach their full potential at scale. This is what we at NeuReality, together with our partners, are devoted to achieving with our holistic solution for AI inference deployment.

The Transformer architecture is a simple model that scales well in all of its dimensions, and its most significant breakthrough is on the training side. Transformers can be pre-trained in a semi-supervised way, learning mostly from unlabeled text rather than relying on labeled examples, which makes it possible to build huge, scaled-up models trained on enormous datasets.
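To illustrate why unlabeled text is enough, here is a minimal Python sketch of BERT-style masked-token example generation: the training target for each masked position is simply the original word, so no human labeling is needed. This is a simplification under our own assumptions (real BERT pre-training works on subword IDs and also swaps some selected tokens for random or unchanged ones), and the names `make_mlm_examples` and `MASK` are hypothetical.

```python
import random

MASK = "[MASK]"  # hypothetical placeholder token

def make_mlm_examples(tokens, mask_prob=0.15, seed=0):
    """Turn an unlabeled token sequence into a (masked input, targets) pair.

    Each selected position is replaced by MASK in the input, and the original
    token at that position becomes the training target.
    """
    rng = random.Random(seed)
    inputs, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs.append(MASK)
            targets[i] = tok  # the label comes from the text itself
        else:
            inputs.append(tok)
    return inputs, targets

text = "the model learns language from raw text".split()
masked, labels = make_mlm_examples(text, mask_prob=0.3, seed=1)
print(masked, labels)
```

Because every sentence in a raw corpus yields training pairs this way, the dataset can grow as large as the available text, which is what allows the scale-up described above.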

To deploy these NLP models in real-world applications that serve large numbers of users or transactions every day, one must account for how applications vary across market sectors and, with them, the language models they need. So far, this has led to two extremes in deployment. The first, and the more common today, is to train a model for every use case to reach the required accuracy, producing a system of many purpose-built models. These models are big, but not outrageously big or unmanageable, and each is used selectively, depending on the user application.

The other extreme is to train a single model on the entire dataset to cover a wide range of uses and to let the model grow continuously. To contain this complexity, developers create huge models, called foundation models, that serve many purposes in one model. In deployment, these two extremes pose very different challenges to the underlying hardware and software infrastructure.

Today’s infrastructure cannot meet the challenges introduced by this new era of Transformer-based NLP. As a result, many of today’s NLP solutions look good on paper but are undeployable at scale. At NeuReality, we work with market leaders in NLP technology and usage, partnering with them to develop and deploy system solutions that address these challenges and overcome the barriers of today’s infrastructure, covering both the underlying hardware and the software layers needed on top of it. NeuReality provides the AI system acceleration technology, which integrates and leverages the deep learning acceleration (DLA) capabilities of partners to address the system aspects of the deployment.

The application and the deployment scenario constrain how the NLP model is computed. For example, chatbots require response times on the order of tens of milliseconds, their input is usually short (a few sentences), and batch sizes are small. Offline document processing is less sensitive to latency, works on long texts and allows batching, but must handle large volumes of data at high data rates. A cloud application can aggregate AI requests from many users while providing each of them with quality of service (QoS) and privacy, but reaching the AI resources adds latency. All of this requires adapting the deployment architecture to achieve efficiency at scale.
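The trade-off between latency and batching can be made concrete with a small Python sketch. It assumes a simple linear latency model (a fixed per-batch overhead plus a per-request cost) and picks the largest batch that still fits the scenario’s response-time budget after network latency; the `Scenario` class, `max_batch` function and all the millisecond figures are hypothetical illustrations, not measurements.

```python
from dataclasses import dataclass

@dataclass
class Scenario:
    name: str
    latency_budget_ms: float  # end-to-end response-time target
    network_ms: float         # latency spent reaching the AI resources

def max_batch(s: Scenario, overhead_ms: float, per_item_ms: float, cap: int = 256) -> int:
    """Largest batch whose modeled compute latency fits the remaining budget.

    Assumed latency model: overhead_ms + per_item_ms * batch.
    Returns 0 if even a single request does not fit.
    """
    remaining = s.latency_budget_ms - s.network_ms
    best = 0
    for b in range(1, cap + 1):
        if overhead_ms + per_item_ms * b > remaining:
            break
        best = b
    return best

chatbot = Scenario("chatbot", latency_budget_ms=50, network_ms=10)
offline = Scenario("offline documents", latency_budget_ms=5000, network_ms=0)
print(max_batch(chatbot, overhead_ms=5, per_item_ms=4))  # 8
print(max_batch(offline, overhead_ms=5, per_item_ms=4))  # 256 (capped)
```

Under the same hardware model, the tight chatbot budget limits batching to a handful of requests, while the offline scenario is bounded only by the cap, which is why one deployment architecture rarely fits both.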

Today’s solutions do not handle these system deployment requirements well, especially when it comes to optimizing system cost and power. NLP models span a very wide range, from small BERT (Bidirectional Encoder Representations from Transformers) models with hundreds of millions of parameters (requiring just a few gigabytes of memory) to hundreds of billions, and even trillions, of parameters for the newest heavy-lifting models such as the GPTs (Generative Pre-trained Transformers).
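A back-of-the-envelope calculation shows how quickly weight memory outgrows one device: bytes equal parameters times bytes per parameter. The Python sketch below uses BERT-base (about 110 million parameters) and a GPT-3-sized model (175 billion parameters) as reference points; the 32 GB per-device capacity is a hypothetical figure, and the function names are illustrative only.

```python
import math

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}

def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory to hold the weights alone, in GB (1 GB = 1e9 bytes).

    Activations, caches and runtime buffers come on top of this.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

def devices_needed(num_params: float, dtype: str, device_memory_gb: float) -> int:
    """Minimum number of devices whose combined memory can hold the weights."""
    return math.ceil(weight_memory_gb(num_params, dtype) / device_memory_gb)

print(weight_memory_gb(110e6, "fp32"))    # BERT-base: about 0.44 GB
print(weight_memory_gb(175e9, "fp16"))    # GPT-3-sized: 350.0 GB
print(devices_needed(175e9, "fp16", 32))  # 11 devices at a hypothetical 32 GB each
```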

The compute required for such models ranges from tens of GOPs (giga-operations) to hundreds of TOPs (tera-operations), or even POPs (peta-operations), for a single inference. The high end is far beyond the capacity of a single inference device, which leads to inefficient compute, high latency, and difficulty switching between models.
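These orders of magnitude follow from a common rule of thumb: a Transformer forward pass costs roughly two operations (a multiply and an add) per parameter per token. The Python sketch below applies it to a BERT-base-sized model over 128 tokens and a GPT-3-sized model over 1,000 tokens; it ignores attention terms that grow with sequence length, and the 100 TOPS sustained device throughput is a hypothetical figure.

```python
def inference_ops(num_params: float, num_tokens: int) -> float:
    """Approximate operations for one forward pass over num_tokens tokens.

    Rule of thumb: about 2 operations (a multiply and an add) per parameter per
    token. Attention terms that grow with sequence length are ignored.
    """
    return 2 * num_params * num_tokens

def seconds_on_device(ops: float, sustained_ops_per_s: float) -> float:
    """Time for one inference on a device sustaining the given throughput."""
    return ops / sustained_ops_per_s

bert_ops = inference_ops(110e6, 128)   # 2.816e10, i.e. tens of GOPs
gpt_ops = inference_ops(175e9, 1000)   # 3.5e14, i.e. hundreds of TOPs
print(gpt_ops / 1e12)                      # 350.0
print(seconds_on_device(gpt_ops, 100e12))  # 3.5 (seconds, far above a chatbot budget)
```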

This poses a major challenge for the memory subsystem of the DLA compute devices, which faces not just a huge capacity demand but also a high bandwidth demand that stresses every memory tier used in the computation, from local caches and close memory tiers to the DLA’s external memory.
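The bandwidth side can be estimated in the same spirit. If every weight must be read from memory once per forward step (for example, once per generated token) and nothing stays resident in on-chip caches, the required bandwidth is parameters times bytes per parameter times steps per second. This Python sketch is a simplifying assumption rather than a model of any particular DLA, and `weight_bandwidth_gb_s` is a hypothetical name.

```python
def weight_bandwidth_gb_s(num_params: float, bytes_per_param: int, steps_per_s: float) -> float:
    """Weight traffic in GB/s if every weight is read from memory once per step.

    A "step" is one forward pass (for example, one generated token). The
    estimate assumes no weights stay resident in on-chip caches.
    """
    return num_params * bytes_per_param * steps_per_s / 1e9

# A GPT-3-sized fp16 model generating 20 tokens/s for a single user:
print(weight_bandwidth_gb_s(175e9, 2, 20))  # 7000.0 (GB/s, i.e. 7 TB/s)
```

Batching several requests into one step shares a single read of the weights among them, which is why low-batch, latency-bound applications put the most pressure on memory bandwidth.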

However, the deployment challenges go beyond the obvious one of meeting the models’ compute and memory requirements. Smaller, BERT-sized NLP models fit lower-latency applications well but are not generic enough. As a result, applications need multiple specialized models, which they invoke for inference one after another in an unpredictable order. The problem is then not just running inference on these models but also switching quickly between different models in real time, during inference, which calls for a whole new way of looking at inference execution. Our team has developed several methodologies to do this very quickly.
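One generic way to reason about model switching is to treat device memory as a cache of resident models: a request for a resident model is served immediately, while a miss forces a (slow) model load and possibly evictions. The Python sketch below uses a least-recently-used (LRU) policy; it is a textbook technique chosen for illustration, not NeuReality’s methodology, and `ModelCache`, `load_fn` and the model names and sizes are hypothetical.

```python
from collections import OrderedDict

class ModelCache:
    """LRU cache of models resident in one device's memory (illustrative sketch).

    load_fn stands in for whatever actually moves weights onto the device.
    """

    def __init__(self, capacity_gb, load_fn):
        self.capacity_gb = capacity_gb
        self.load_fn = load_fn
        self.resident = OrderedDict()  # name -> size_gb, least recently used first
        self.loads = 0                 # number of (expensive) model loads

    def used_gb(self):
        return sum(self.resident.values())

    def get(self, name, size_gb):
        if name in self.resident:
            self.resident.move_to_end(name)  # hit: mark as most recently used
            return name
        if size_gb > self.capacity_gb:
            raise ValueError(f"{name} does not fit on one device")
        while self.used_gb() + size_gb > self.capacity_gb:
            self.resident.popitem(last=False)  # evict the least recently used model
        self.load_fn(name)
        self.loads += 1
        self.resident[name] = size_gb
        return name

cache = ModelCache(2.0, load_fn=lambda name: None)
for model, size in [("intent", 0.5), ("ner", 0.5), ("sentiment", 0.5),
                    ("intent", 0.5), ("summarize", 1.0)]:
    cache.get(model, size)
print(list(cache.resident), cache.loads)  # ['sentiment', 'intent', 'summarize'] 4
```

The number of loads is the cost that fast switching techniques aim to reduce or hide.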

Huge models, on the other hand, are more capable and flexible in covering the span of application needs. But running them on every NLP request requires enormous compute power, memory capacity and I/O bandwidth spread across multiple computation nodes (accelerators and servers). Our toolchain software and runtime engine break the execution down across multiple devices so that the required performance is met with reasonable latency. This is critical for using these models in real NLP applications such as chatbots or online Q&A, and it requires efficient handling of all this processing across multiple devices.
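As an illustration of breaking execution down across devices, the Python sketch below splits a model’s layers into contiguous pipeline stages, one per device, so that the slowest stage (which bounds pipeline throughput) is as fast as possible. It uses a standard binary search over the stage-cost limit with a greedy feasibility check; the per-layer costs are hypothetical integers, and a real toolchain would also weigh memory per device and communication between stages.

```python
def can_split(costs, devices, limit):
    """True if costs fit into at most `devices` contiguous stages, each <= limit."""
    stages, current = 1, 0
    for c in costs:
        if c > limit:
            return False
        if current + c > limit:
            stages += 1
            current = c
        else:
            current += c
    return stages <= devices

def partition_layers(costs, devices):
    """Split layers into contiguous stages minimizing the most expensive stage."""
    lo, hi = max(costs), sum(costs)
    while lo < hi:  # binary search for the smallest feasible stage limit
        mid = (lo + hi) // 2
        if can_split(costs, devices, mid):
            hi = mid
        else:
            lo = mid + 1
    # Rebuild the stages greedily using the optimal limit.
    stages, current = [[]], 0
    for i, c in enumerate(costs):
        if current + c > lo:
            stages.append([])
            current = 0
        stages[-1].append(i)
        current += c
    return lo, stages

print(partition_layers([4, 2, 3, 5, 1, 6], 3))  # (8, [[0, 1], [2, 3], [4, 5]])
```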

For the smaller models, when used in a multi-client deployment, we provide a low-latency, high-efficiency distribution system to select the right model to use and trigger its execution.
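A minimal Python sketch of such a distribution step, under our own assumptions: each request type maps to a model, the request goes to the least-loaded device that already hosts that model, and only if no device hosts it does it fall back to the least-loaded device overall (which would then need to load the model). The `Device` class, `dispatch` function and the task and model names are hypothetical and do not describe NeuReality’s implementation.

```python
from dataclasses import dataclass, field

@dataclass
class Device:
    name: str
    models: set = field(default_factory=set)  # models resident on this device
    queued: int = 0                            # requests currently in flight

def dispatch(task, task_to_model, devices):
    """Select the model for a task and the device that should run it."""
    model = task_to_model[task]
    hosting = [d for d in devices if model in d.models]
    # Prefer devices that already hold the model; balance load by queue depth.
    target = min(hosting or devices, key=lambda d: d.queued)
    target.queued += 1  # account for the newly assigned request
    return model, target.name

devices = [Device("d0", {"intent", "ner"}, 3), Device("d1", {"intent"}, 1),
           Device("d2", {"summarize"}, 0)]
routes = {"classify": "intent", "extract": "ner", "qa": "qa-model"}
print(dispatch("classify", routes, devices))  # ('intent', 'd1')
print(dispatch("extract", routes, devices))   # ('ner', 'd0')
print(dispatch("qa", routes, devices))        # ('qa-model', 'd2')
```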

In real use-case deployments, the focus is on making NLP easy to deploy as well as affordable and scalable. Methods such as compute disaggregation, on-the-fly orchestration and provisioning, and inter-node compute graph partitioning and communication are all integrated into the system solution, making NLP-as-a-Service accessible to users across market sectors.

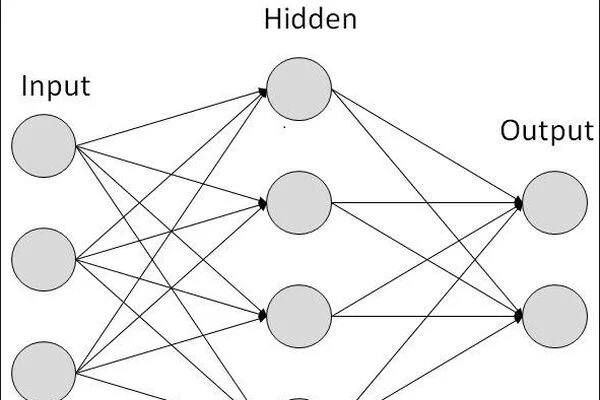

Figure 1: Multiple NLP model fast transition, runtime execution

NeuReality’s new AI-centric architecture, with its network-attached Server-on-a-Chip, enables an efficient implementation of disaggregated AI inference. The heavy-lifting AI nodes provide dedicated AI resources for NLP AI pipelines. They also allow these resources to scale linearly in a disaggregated architecture, supporting the emerging demands of NLP models across the different use cases. This solution enables efficient mapping of multiple models within and across devices, sending each inference request to the right device and balancing the AI load. At the same time, it enforces Quality of Service (QoS) and Service Level Agreement (SLA) policies using its AIoF (AI over Fabric) protocol with credit-based AI flow control. The DLA itself gains a fast model self-loading capability that lets it respond quickly to model changes between different users.
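Credit-based flow control is a general technique: the receiver grants one credit per free buffer slot, and the sender transmits only while it holds credits, so the receiver can never be overrun. The Python sketch below shows that generic mechanism only; it is not the AIoF protocol, whose details the article does not describe, and `CreditSender` and its methods are hypothetical names.

```python
from collections import deque

class CreditSender:
    """Generic credit-based flow control on the sending side (illustrative)."""

    def __init__(self, initial_credits):
        self.credits = initial_credits  # free receiver buffer slots we may use
        self.pending = deque()          # requests waiting for credits
        self.sent = []                  # requests actually transmitted

    def submit(self, request):
        self.pending.append(request)
        self._drain()

    def grant(self, n):
        """Called when the receiver reports that n buffer slots were freed."""
        self.credits += n
        self._drain()

    def _drain(self):
        # Transmit queued requests only while credits remain.
        while self.pending and self.credits > 0:
            self.sent.append(self.pending.popleft())
            self.credits -= 1

s = CreditSender(initial_credits=2)
for r in ["r1", "r2", "r3"]:
    s.submit(r)
print(s.sent, list(s.pending))  # ['r1', 'r2'] ['r3']
s.grant(1)
print(s.sent)                   # ['r1', 'r2', 'r3']
```

Keeping a separate credit pool per traffic class is one common way such a scheme can be extended toward QoS enforcement.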

Figure 2: Huge NLP model split between multiple NR1 chips, runtime execution

To handle large models, NeuReality partitions the model with its software tools, natively deploying huge models across multiple devices while enabling efficient peer-to-peer traffic for parallel execution.
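One well-known way to split a single layer across devices is tensor parallelism: the weight matrix is cut column-wise, each device multiplies the input by its own shard, and the partial outputs are concatenated (an all-gather in a real system). The pure-Python sketch below demonstrates that the split result equals the full matrix product; it illustrates the general technique only, not NeuReality’s partitioning tools, and the function names are hypothetical.

```python
def matmul(a, b):
    """Plain matrix product of row-major nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def split_columns(w, parts):
    """Split weight matrix w column-wise into up to `parts` contiguous shards."""
    cols = len(w[0])
    step = -(-cols // parts)  # ceiling division
    return [[row[i:i + step] for row in w] for i in range(0, cols, step)]

def tensor_parallel_matmul(x, w, devices):
    # Each "device" holds one column shard of w and computes its slice of the output.
    partials = [matmul(x, shard) for shard in split_columns(w, devices)]
    # Concatenating the slices row by row reassembles the full result.
    return [sum((p[r] for p in partials), []) for r in range(len(x))]

x = [[1, 2], [3, 4]]
w = [[1, 0, 2, 1], [0, 1, 1, 3]]
print(tensor_parallel_matmul(x, w, 2))  # [[1, 2, 4, 7], [3, 4, 10, 15]]
print(matmul(x, w))                     # [[1, 2, 4, 7], [3, 4, 10, 15]]
```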

The company’s AIoF protocol enables efficient partitioning of the AI jobs that handle large models, natively continuing the pipeline’s execution in the AI hypervisor. It also reduces device overheads so that transmission latency is kept minimal and runs in parallel with AI job execution. This is especially important for latency-critical applications, where latency must be bounded.
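Why overlapping transmission with execution matters can be shown with simple arithmetic. If a job is split into chunks, and each chunk takes `compute_ms` to execute and `transfer_ms` to send to the next device, running the two back to back costs their sum per chunk, while a two-stage pipeline (sending chunk i while computing chunk i+1) pays the slower stage per chunk plus one fill and drain. The Python sketch below uses hypothetical timings.

```python
def sequential_latency(chunks, compute_ms, transfer_ms):
    """Each chunk is computed and then transmitted before the next one starts."""
    return chunks * (compute_ms + transfer_ms)

def overlapped_latency(chunks, compute_ms, transfer_ms):
    """Two-stage pipeline: chunk i is transmitted while chunk i+1 is computed."""
    return compute_ms + transfer_ms + (chunks - 1) * max(compute_ms, transfer_ms)

print(sequential_latency(8, 2, 1))  # 24
print(overlapped_latency(8, 2, 1))  # 17
```

When transfers are shorter than compute, overlapping hides almost all of the transmission time, leaving only the final transfer on the critical path.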

AI natural language processing introduces significant deployment challenges because of its specific model characteristics and use-case deployment scenarios. NeuReality partners with today’s market leaders and is leading the evolution of AI NLP deployment at scale by resolving its system-level challenges and barriers. NeuReality introduces key technology IP that resolves NLP-specific challenges across a wide range of use cases, while providing performance and high usability through AI-centric hardware and user-experience-oriented deployment software.

Lior Khermosh is CTO of NeuReality Ltd. and was previously co-founder and chief scientist with ParallelM. Before that, Khermosh spent 12 years with PMC-Sierra, where he was a Fellow, and was part of the founding team of Passave.
