How AI is used for data access governance

SOURCE: ANALYTICSINDIAMAG.COM

JUN 20, 2022

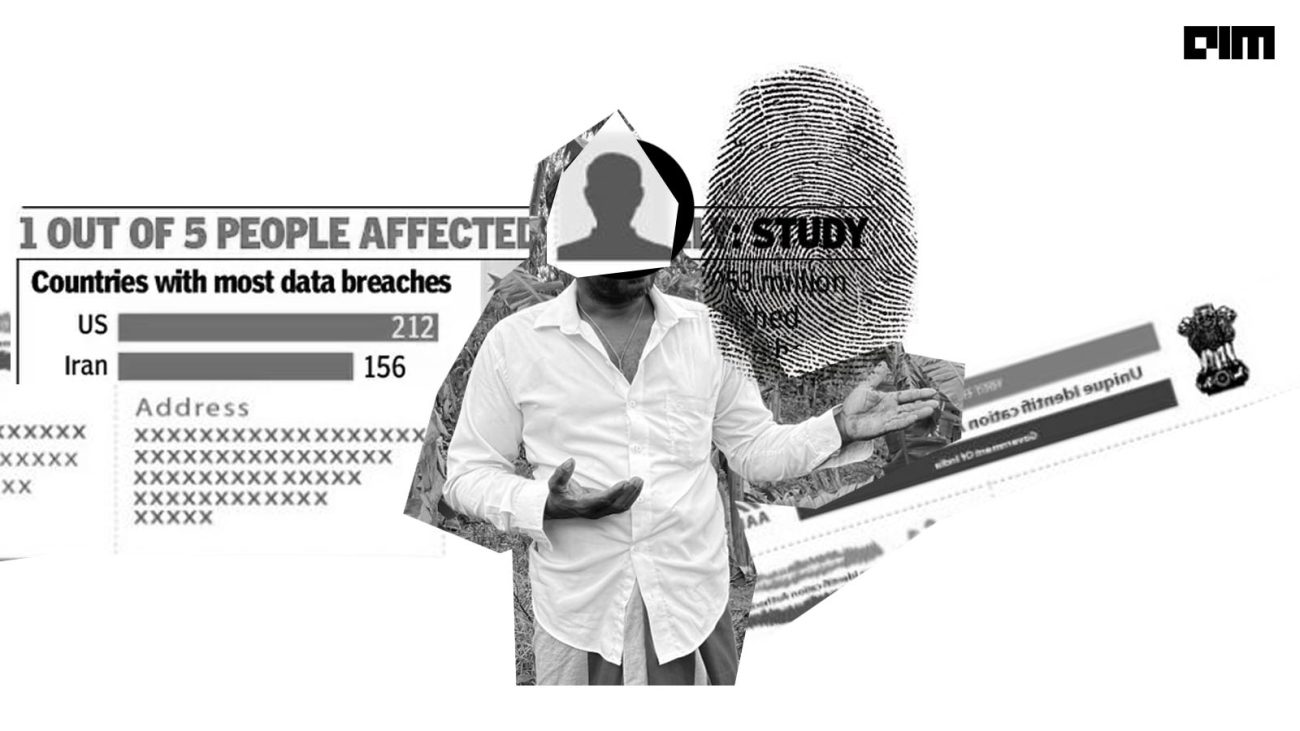

Atul Nair, a security researcher and volunteer at Kerala Police Cyberdome, found that the Aadhaar details of around 11 crore (110 million) farmers had been leaked from the PM Kisan website.

According to the 2021 Data Risk Report: Financial Services, a financial services employee has access to 13 percent of the company’s total files on average. In practice, this means employees can modify over half a million files, including almost 20 percent of the files that contain sensitive data. Further, file exposure doubles as company size increases: on average, the largest financial services organisations have over 20 million files open to every employee.

Governments and businesses worldwide are taking measures to protect sensitive data. While governments are working on the legislation and policy front, enterprises are working to deploy the latest technologies and processes to address cyber security threats.

Cybersecurity firms are gradually moving towards data access governance to help organisations deal with data breaches. Data access governance is an approach for defining how an organisation manages and controls who has access to what data assets, both internally and externally. It encompasses people, processes, and technologies required to manage and protect data.

With the help of data access governance, data security firms are helping enterprises gain visibility into sensitive unstructured data across the organisation and enforce policies controlling access to that data.

“We first help organisations understand what information is there in those data stores–whether it is sensitive, and if it is sensitive, is it intellectual property, is it design, is it sensitive SCADA information, is it sensitive corporate information etc. So we would be able to help organisations get that visibility,” said Maheswaran S, Country Manager, South Asia, Varonis. “We just don’t provide visibility, we also make the intelligence actionable,” he added.

Blast radius is a way of measuring the total impact of a potential security breach. “In a 10,000+ user organisation, each user gets access to at least an average of 10+ million files. But only 5 percent would be required for users. Organisations usually don’t validate whether users need access to them or not,” said Maheswaran.
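The gap Maheswaran describes, between the files a user can reach and the files a user actually needs, can be estimated by comparing granted permissions with access logs. The following is a minimal sketch of that comparison, not any vendor's method; the function names, user names, and file names are hypothetical.

```python
from typing import Dict, Set

# Hypothetical inputs: user -> set of file paths.
# `granted` is what each user CAN open; `accessed` is what they DID open.
AccessMap = Dict[str, Set[str]]


def unused_access(granted: AccessMap, accessed: AccessMap) -> AccessMap:
    """Return, per user, the files they can reach but never opened."""
    return {
        user: files - accessed.get(user, set())
        for user, files in granted.items()
    }


def used_fraction(granted: AccessMap, accessed: AccessMap) -> Dict[str, float]:
    """Return, per user, the share of granted files that were actually used."""
    return {
        user: (len(files & accessed.get(user, set())) / len(files)) if files else 0.0
        for user, files in granted.items()
    }


if __name__ == "__main__":
    granted = {
        "asha": {"a.xlsx", "b.docx", "c.pdf", "d.csv"},
        "ravi": {"a.xlsx", "b.docx"},
    }
    accessed = {"asha": {"a.xlsx"}, "ravi": {"a.xlsx", "b.docx"}}
    print(unused_access(granted, accessed))
    print(used_fraction(granted, accessed))
```

Files that appear in `unused_access` are candidates for having access revoked, which shrinks the blast radius if that account is compromised.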

AI and ML have become critical in dealing with cybersecurity threats. These technologies can swiftly analyse millions of data sets and track down a range of cyber threats, from malware to phishing attacks.

“We have leveraged a lot of AI and machine learning to look for deviations in normal users’ data access behaviour. When we see such behaviour, we alert and prevent that user from accessing the data,” said Maheswaran.

AI and ML enable data security firms to combine several sources of data access telemetry, such as authentication telemetry and perimeter telemetry, establish behavioural patterns for data users, and advise clients based on these patterns.
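One simple way to picture "deviation from normal data access behaviour" is a per-user baseline with a z-score threshold. The sketch below is an illustration under stated assumptions, not the approach any vendor uses: the function name, the daily file-access counts, and the threshold of 3 standard deviations are all hypothetical, and production systems would combine many more signals.

```python
from statistics import mean, stdev
from typing import List


def is_anomalous(history: List[int], today: int, threshold: float = 3.0) -> bool:
    """Flag today's file-access count if it sits more than `threshold`
    sample standard deviations above the user's historical mean."""
    if len(history) < 2:
        return False  # too little history to estimate a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today > mu  # flat baseline: any increase counts as a deviation
    return (today - mu) / sigma > threshold


if __name__ == "__main__":
    # Hypothetical number of files one user opened on each of the last 7 days.
    baseline = [40, 52, 47, 45, 50, 43, 48]
    print(is_anomalous(baseline, 49))   # an ordinary day
    print(is_anomalous(baseline, 900))  # a sudden bulk read
```

Only upward deviations are flagged here, on the assumption that a sudden surge in reads is the behaviour of interest; a real system would likely also consider which files were touched, from where, and at what time.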

Threat detection and prediction

AI systems can predict how and where a compromise is most likely, so organisations can plan and allocate resources to deal with vulnerabilities. Using sophisticated algorithms and pattern recognition, AI systems can detect malware or ransomware before it enters the system. ML can also group the data from an attack and prepare it for immediate analysis, and can give cybersecurity teams simplified reports that make processing and decision-making easier.

System configuration

Manually assessing configuration security is painstaking. AI and ML can automate alerts when suspicious activity occurs, and security teams can get advice on how to proceed or even have systems in place that adjust settings automatically as needed. Responsive tools can also help teams find and mitigate issues as network systems are replaced, modified, and updated.

Moreover, individually setting up an organisation’s endpoint machines is time-consuming. Even after the initial setup, IT teams find themselves revisiting the same machines to adjust configurations or update outdated setups. Here, AI and ML-based systems can help address such issues with minimal delay.

Adaptability

Humans often cannot adapt their skill sets to the specialised requirements of an organisation, which leads to downtime. AI and ML, however, provide customised solutions that boost adaptability.

Data interpretation

Machine learning excels at monotonous tasks, such as identifying data patterns, where humans are less effective. It can also render data in a readable form that is ready for interpretation.

Predictive forecasting

AI and ML systems can evaluate existing datasets with natural language processing and predict potential outcomes. Predictive forecasting is paramount in building threat models.

Recommends courses of action

ML helps in recommending courses of action based on behaviour patterns. In addition, AI helps crunch staggering amounts of data and enables cybersecurity teams to adapt their strategy to a continually altering landscape.

Data categorisation and data clustering

AI and ML help categorise data points, identify outliers, and place them into clustered data sets. These clustered data sets can help determine how an attack happened, and labelling data points helps build a profile of attacks and vulnerabilities.
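As a rough illustration of clustering, the sketch below groups events with a small k-means routine written against the Python standard library. It is a toy example under stated assumptions: the article does not name a clustering algorithm, the two features (logins per hour, megabytes transferred) are hypothetical, the centroids are seeded with the first `k` points to keep the run deterministic, and the features are left unscaled for brevity.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def kmeans(points: Sequence[Point], k: int, iterations: int = 20) -> Tuple[List[Point], List[int]]:
    """Cluster `points` into `k` groups; return (centroids, label per point)."""
    centroids: List[Point] = [tuple(p) for p in points[:k]]  # deterministic seed
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids: List[Point] = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster in place
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return centroids, labels


if __name__ == "__main__":
    # Hypothetical events: (logins per hour, MB transferred).
    events = [(2, 10), (20, 500), (3, 12), (1, 9), (22, 480), (19, 510)]
    centroids, labels = kmeans(events, k=2)
    print(labels)
```

Once events carry a cluster label, an analyst can attach a human-readable name to each cluster (for example "routine use" versus "bulk transfer"), which is the labelling step the paragraph above refers to.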

Identifying bots

AI and machine learning help understand website traffic and distinguish between good bots (like search engine bots) and bad bots.

Enables working with fewer staff

AI-based security tools help organisations work with fewer staff while supporting existing staff in various tasks, which cumulatively saves cost and time.

However, organisations need substantially more resources and financial investment to build and maintain sophisticated AI systems. Moreover, as AI systems are trained on data sets, organisations need diverse data sets to build threat models. Acquiring these data sets is time-consuming, requires huge investments, and may go against data privacy laws.

Possible solutions include keeping data policies up to date and adopting federated learning to address data privacy. In federated learning, an algorithm is trained across multiple servers, each holding a local dataset, without sharing the sensitive data itself.
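To make the federated learning idea concrete, here is a minimal sketch of federated averaging on a one-parameter linear model. It is a toy under stated assumptions rather than a production recipe: the model (`y ~ w * x`), the learning rate, the client datasets, and the function names are all hypothetical. The point it illustrates is that each client sends back only its updated weight, never its raw records.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs that never leave the client


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps on one client's own data
    (mean squared error for the model y ~ w * x)."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(w: float, clients: List[Dataset], rounds: int = 50) -> float:
    """Each round: every client trains locally, then the server averages the
    returned weights, weighted by how many samples each client holds."""
    for _ in range(rounds):
        updates = [(local_update(w, data), len(data)) for data in clients]
        total = sum(n for _, n in updates)
        w = sum(w_i * n for w_i, n in updates) / total
    return w


if __name__ == "__main__":
    # Two hypothetical organisations whose private data both follow y = 3x.
    client_a = [(1.0, 3.0), (2.0, 6.0)]
    client_b = [(3.0, 9.0), (4.0, 12.0), (5.0, 15.0)]
    print(federated_average(0.0, [client_a, client_b]))
```

The server in this sketch only ever sees weights and sample counts. Real deployments typically add further protections, since model updates can themselves leak information.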
