DeepMind Introduces ‘AlphaCode’: A Code Generation System With Advanced Machine Learning Applied To Solving Competitive Programming Problems

SOURCE: MARKTECHPOST.COM

FEB 04, 2022

Computer programming has become a general-purpose problem-solving tool in our daily lives, industries, and research centers. Yet it has proven difficult to apply AI breakthroughs to building systems that make programming more efficient and accessible. Large-scale language models have recently shown a remarkable ability to generate code and complete simple programming tasks. However, these models perform poorly on harder, unseen problems that require problem-solving skills beyond translating instructions into code.

Writing code that achieves a specified goal requires searching through a large, structured space of programs with a sparse reward signal. Competitive programming tasks add to this difficulty: they require knowledge of algorithms and an understanding of complex natural language, so they remain very hard for machines.

In early work on program synthesis for competitive programming, large transformer models attained only low single-digit solve rates, and they cannot reliably produce solutions for the vast majority of problems. Furthermore, existing competitive programming datasets contain too few test cases, which makes their metrics unreliable for measuring research progress.

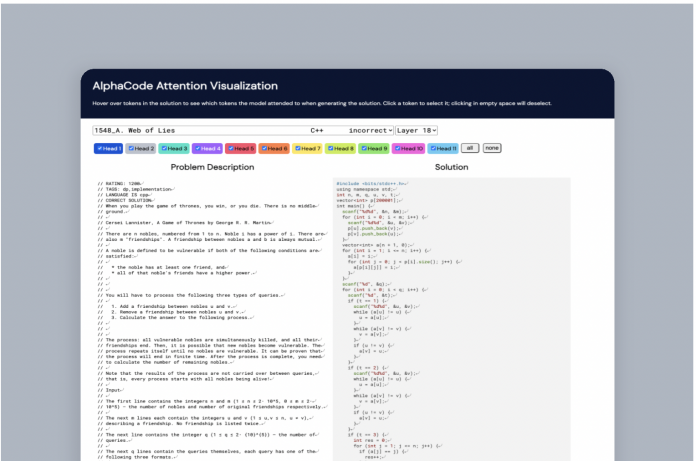

To that end, DeepMind’s team has introduced AlphaCode, a system for writing competitive computer programs. AlphaCode generates code at an unprecedented scale using transformer-based language models and then intelligently filters the output down to a small set of promising programs. On new problems that require a combination of critical thinking, logic, algorithms, coding, and natural language understanding, AlphaCode ranked within the top 54 percent of competitors in programming competitions.

All of the models are pre-trained on open-source code from GitHub, covering code files in several popular languages: C++, C#, Go, Java, and JavaScript, to name a few. They are then fine-tuned on CodeContests, a dataset of competitive programming problems. This dataset gathers data from various sources, splits it temporally so that all training data predates all evaluation problems, includes additional generated tests to check correctness, and evaluates submissions in a competitive programming environment.
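The temporal split can be illustrated with a minimal sketch. The `Problem` record, the `temporal_split` helper, the sample problems, and the cutoff date below are all hypothetical and are not taken from CodeContests; the sketch only shows the idea that every training problem predates every evaluation problem.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Problem:
    # Hypothetical record; the real dataset schema may differ.
    name: str
    published: date


def temporal_split(problems, cutoff):
    """Put problems published before `cutoff` in training, the rest in evaluation."""
    train = [p for p in problems if p.published < cutoff]
    evaluation = [p for p in problems if p.published >= cutoff]
    return train, evaluation


# Made-up problems and cutoff, for illustration only.
problems = [
    Problem("A", date(2020, 5, 1)),
    Problem("B", date(2021, 3, 15)),
    Problem("C", date(2021, 9, 1)),
]
train, evaluation = temporal_split(problems, cutoff=date(2021, 7, 1))
```

Splitting by date rather than at random helps ensure the model is scored on problems it could not have seen during training.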

The team frames competitive programming code generation as a sequence-to-sequence translation task: given a problem description X in natural language, produce a corresponding solution Y in a programming language. This framing motivated an encoder-decoder transformer architecture for AlphaCode, which models the conditional probability p(Y | X). The architecture feeds the problem description X into the encoder as a flat sequence of characters (including metadata, tokenized). It samples Y autoregressively from the decoder, one token at a time, until it produces an end-of-code token, at which point the code can be compiled and run.

Source: https://storage.googleapis.com/deepmind-media/AlphaCode/competition_level_code_generation_with_alphacode.pdf
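The autoregressive decoding loop described above can be sketched as follows. This is an illustrative toy and not AlphaCode's implementation: `toy_next_token_distribution` is a hypothetical stand-in for the decoder, the `<eoc>` marker is an assumed name for the end-of-code token, and the "model" here simply replays a fixed script.

```python
import random

END_OF_CODE = "<eoc>"  # assumed name for the end-of-code token


def toy_next_token_distribution(description, prefix):
    """Hypothetical stand-in for the decoder: maps (X, tokens so far) to {token: probability}."""
    script = ["print", "(", "input", "(", ")", ")", END_OF_CODE]
    next_token = script[min(len(prefix), len(script) - 1)]
    return {next_token: 1.0}


def sample_solution(description, next_token_distribution, rng, max_tokens=64):
    """Sample Y one token at a time until the end-of-code token appears."""
    tokens = []
    for _ in range(max_tokens):
        dist = next_token_distribution(description, tokens)
        choices, weights = zip(*dist.items())
        token = rng.choices(choices, weights=weights, k=1)[0]
        if token == END_OF_CODE:
            break
        tokens.append(token)
    return tokens


solution = sample_solution(
    "Echo the input line.", toy_next_token_distribution, random.Random(0)
)
```

In a real system the distribution would come from the trained decoder conditioned on the encoded description, and repeated sampling would yield many different candidate programs.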

An encoder-decoder design provides a bidirectional representation of the description (tokens at the beginning of the description can attend to tokens at the end). It also offers the flexibility to structure the encoder and decoder differently. The researchers found that using a shallow encoder and a deep decoder improves training efficiency without hurting solve rates.

At evaluation time, AlphaCode proceeds as follows:

The researchers evaluated their model by sampling many C++ and Python programs for each problem. They then filtered, clustered, and reranked the resulting solutions down to a small set of 10 candidate programs for external evaluation. They collaborated with Codeforces and tested AlphaCode by simulating participation in ten recent contests. This automated pipeline takes the place of human competitors’ trial-and-error cycle of debugging, compiling, testing, and submitting.
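The filter, cluster, and select stage can be sketched as below. This is a simplified illustration under stated assumptions, not the authors' code: candidate programs are modeled as plain Python callables, `select_candidates` and the toy programs are hypothetical, and clustering by identical outputs on a set of probe inputs is one plausible way to group behaviorally equivalent programs.

```python
from collections import defaultdict


def select_candidates(programs, example_tests, probe_inputs, k=10):
    """Filter by example tests, cluster by behavior, pick one program per large cluster."""
    # 1. Filter: keep only programs that pass every example test.
    passing = [p for p in programs if all(p(x) == y for x, y in example_tests)]

    # 2. Cluster: programs with identical outputs on the probe inputs share a cluster.
    clusters = defaultdict(list)
    for p in passing:
        signature = tuple(p(x) for x in probe_inputs)
        clusters[signature].append(p)

    # 3. Rank clusters from largest to smallest and take one program from each.
    ranked = sorted(clusters.values(), key=len, reverse=True)
    return [cluster[0] for cluster in ranked[:k]]


# Toy candidates for a made-up task whose only example test is 2 -> 4.
def double(x):
    return x * 2


def add_self(x):
    return x + x


def square(x):
    return x ** 2


def plus_one(x):
    return x + 1


selected = select_candidates(
    [double, add_self, square, plus_one],
    example_tests=[(2, 4)],
    probe_inputs=[0, 3],
)
```

Here `plus_one` is filtered out, `double` and `add_self` fall into the same cluster, and `square` forms its own, so two distinct candidates remain. Picking one program per cluster avoids spending the limited submission budget on behaviorally identical solutions.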

Paper: https://storage.googleapis.com/deepmind-media/AlphaCode/competition_level_code_generation_with_alphacode.pdf

Reference: https://deepmind.com/blog/article/Competitive-programming-with-AlphaCode
