Deep learning for computer vision: A brief history and key trends

SOURCE: ANALYTICSINDIAMAG.COM

SEP 27, 2021

Until 2005, we did not have a lot of data to create these algorithms or to tell them if they are working well.

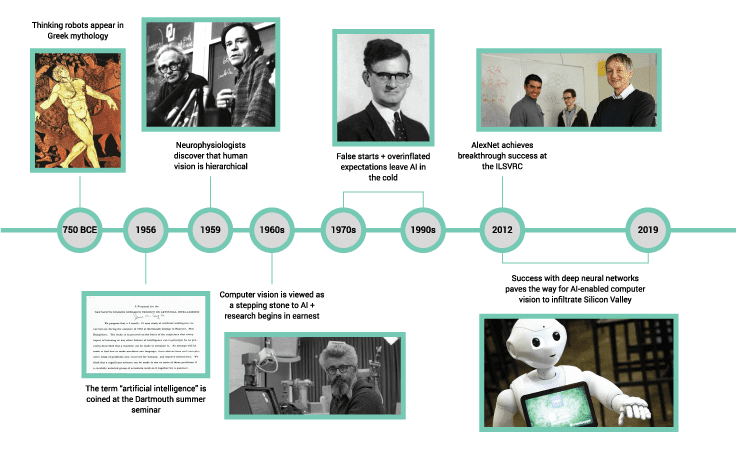

Knowing the history of artificial intelligence not only informs our understanding of the past and honours its progress but also sets a course for future discovery and advancement. At the ‘Deep Learning DevCon 2021,’ Angshuman Gosh, head of data science at Sony Research India, gave a brief history of computer vision and explained how deep learning has driven innovation and progress in the field. He also discussed use cases, trends and applications of computer vision, including GANs.

With 12+ years of experience in leading technology, media, and retail companies like Disney, Target, Grab, and Wipro, Gosh currently heads the data science team at Sony Research India. He is also an official member and contributor at the Forbes Technology Council and a visiting professor at top IITs, IIMs, and XLRI.

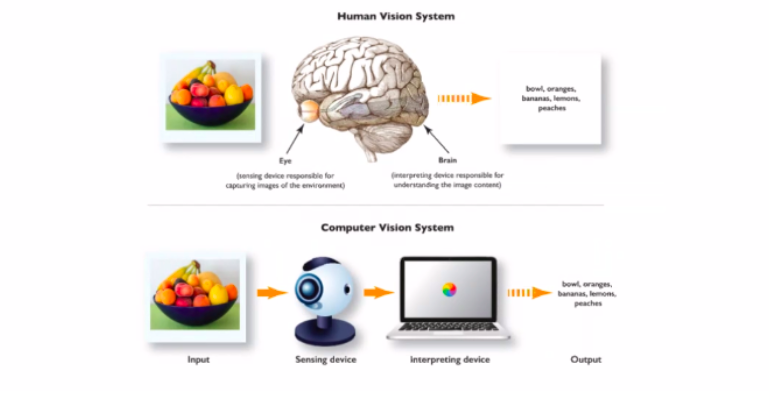

Computer vision is an area of artificial intelligence (AI) that enables systems to derive meaningful information from images, videos and other visual inputs — and take actions or make recommendations based on that information. “In brief, computer vision tries to emulate human vision using computers and the power of AI,” said Gosh, stating that computer vision has made great strides, but it still has a long way to go.

(Source: Angshuman Gosh | DLDC 2021)

According to Gosh, the computer vision industry is worth about $11 billion and is expected to reach $25 billion in the next five to six years. He added that this figure leaves out many applications that build on computer vision, such as autonomous vehicles, drones and robotics. “If we include those, the market size will probably be much more than this,” he said.
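As a rough check on what that forecast implies, the short sketch below computes the compound annual growth rate needed to go from $11 billion to $25 billion. The function name `implied_cagr` is illustrative, and the calculation assumes smooth year-on-year growth, which the talk did not state.

```python
def implied_cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate that takes start_value to end_value in `years`."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the talk: about $11 billion now, $25 billion in five to six years.
cagr_5y = implied_cagr(11.0, 25.0, 5)
cagr_6y = implied_cagr(11.0, 25.0, 6)

print(f"Implied growth over 5 years: {cagr_5y:.1%} a year")
print(f"Implied growth over 6 years: {cagr_6y:.1%} a year")
```

Under these assumptions the forecast corresponds to growth of roughly 15 to 18 per cent a year.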

(Source: Angshuman Gosh | DLDC 2021)

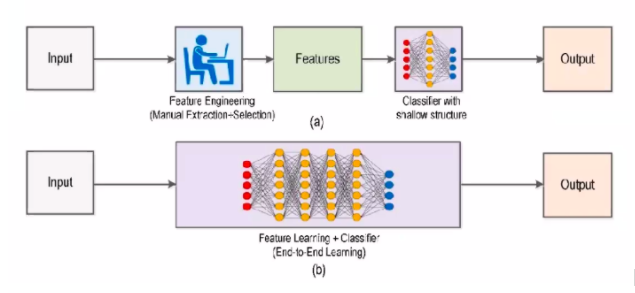

Comparing computer vision then and now, Gosh said that much of the earlier work involved manual inspection and selection of features. “But, now we don’t need to select the feature manually. Instead, the model can learn the feature from the image or the video, and it can process it and give output,” he added.

Computer vision: old (a) vs new (b) (Source: Angshuman Gosh | DLDC 2021)

“Until 2005, we did not have a lot of data to create these algorithms or to tell them if they are working well,” said Gosh. But, in 2009, everything changed, as many US institutions and industry players came together to create a massive dataset called ImageNet.

The dataset included close to 1000 object classes, including aeroplane, automobile, bird, cat, deer, dog, frog, horse, ship, truck, etc. “With this, the problem was solved, and we had a massive dataset to identify whether the model is working or not,” he added.

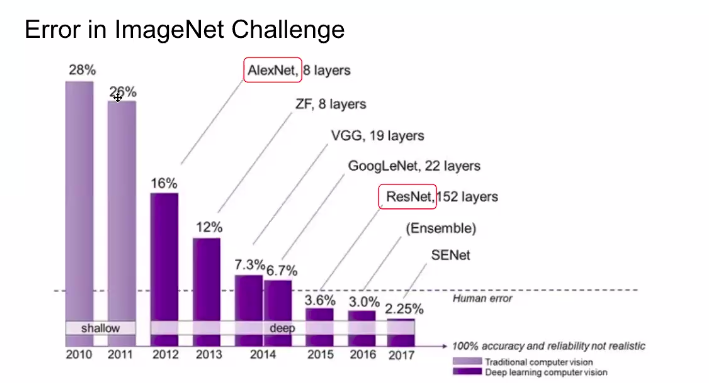

Citing the ImageNet Kaggle challenge, Gosh said that the error rate had fallen significantly over the years, thanks to models such as AlexNet, which brought the error rate down to about 16 per cent in 2012, followed by ZF (12 per cent), VGG (7.3 per cent), GoogLeNet (6.7 per cent), ResNet (3.6 per cent), Ensemble (3 per cent) and SENet (2.25 per cent).

“2012 onwards, people started using convolutional neural networks (CNNs), and did some adjustments that reduced the error significantly,” said Gosh. Before this, he said, people relied on shallow neural networks, where the error rate was close to 30 per cent.
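The error rates usually quoted for the ImageNet challenge are top-5 errors: a prediction counts as correct if the true class is among the model's five highest-scoring guesses. The sketch below shows how such a metric can be computed. It is a minimal illustration with NumPy; the function name `top_k_error` and the toy scores are made up for this example and are not taken from the talk.

```python
import numpy as np


def top_k_error(scores: np.ndarray, labels: np.ndarray, k: int = 5) -> float:
    """Fraction of samples whose true label is not among the k highest scores."""
    # Column indices of the k largest scores in each row (order inside the k is arbitrary).
    top_k = np.argpartition(scores, -k, axis=1)[:, -k:]
    hit = (top_k == labels[:, None]).any(axis=1)
    return float(1.0 - hit.mean())


# Toy data: 4 images, 5 hypothetical classes, one row of class scores per image.
scores = np.array([
    [0.70, 0.10, 0.10, 0.05, 0.05],  # true class 0: best guess is right
    [0.30, 0.40, 0.20, 0.05, 0.05],  # true class 0: only the second guess is right
    [0.10, 0.20, 0.60, 0.05, 0.05],  # true class 3: not in the top two
    [0.05, 0.05, 0.10, 0.20, 0.60],  # true class 4: best guess is right
])
labels = np.array([0, 0, 3, 4])

top1 = top_k_error(scores, labels, k=1)
top2 = top_k_error(scores, labels, k=2)
print(f"top-1 error: {top1:.2f}, top-2 error: {top2:.2f}")
```

Allowing more guesses can only lower the error, which is why top-5 figures look much better than top-1 figures for the same model.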

(Source: Angshuman Gosh | DLDC 2021)

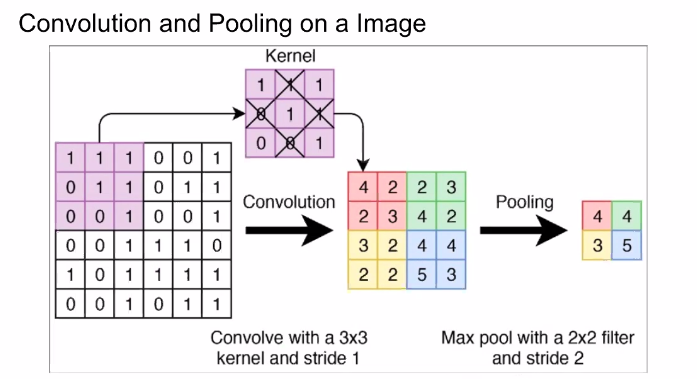

AlexNet was one of the first key models to become popular in computer vision. Explaining the science behind AlexNet from the basics, Gosh said it is essential to know two key concepts: convolution and pooling on an image.

“The fundamental concept here is that you have a filter, which is smaller than the size of the image, and you slide the filter across the image at every point of time, across the pixel. That creates an output of the convolution. Once you have convolution, you can use multiple filters to create multiple outputs,” explained Gosh.
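The sliding-filter idea can be sketched in a few lines of NumPy. This is a minimal illustration rather than how any production library implements it: the helper names `conv2d` and `max_pool2d`, the 6×6 toy image and the two hand-written edge filters are all assumptions made for the example. In a real CNN the filter values are learned, and, as here, the operation is technically cross-correlation (the filter is not flipped).

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray, stride: int = 1) -> np.ndarray:
    """Slide `kernel` over `image` (no padding) and sum the element-wise products."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            top, left = i * stride, j * stride
            patch = image[top:top + kh, left:left + kw]
            out[i, j] = np.sum(patch * kernel)
    return out


def max_pool2d(feature_map: np.ndarray, size: int = 2) -> np.ndarray:
    """Keep the maximum of each non-overlapping size x size block."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    blocks = feature_map[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))


# Toy 6x6 "image" whose pixel value is 6 * row + column.
image = np.arange(36, dtype=float).reshape(6, 6)

# Two hand-written 3x3 filters; each one produces its own output map.
filters = {
    "horizontal_edge": np.array([[1.0, 1.0, 1.0], [0.0, 0.0, 0.0], [-1.0, -1.0, -1.0]]),
    "vertical_edge": np.array([[1.0, 0.0, -1.0], [1.0, 0.0, -1.0], [1.0, 0.0, -1.0]]),
}

feature_maps = {name: conv2d(image, kernel) for name, kernel in filters.items()}
pooled = {name: max_pool2d(fmap) for name, fmap in feature_maps.items()}

for name in filters:
    print(name, feature_maps[name].shape, "->", pooled[name].shape)
```

Each filter turns the 6×6 input into a 4×4 feature map, and 2×2 max pooling then halves each spatial dimension.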

(Source: Angshuman Gosh | DLDC 2021)

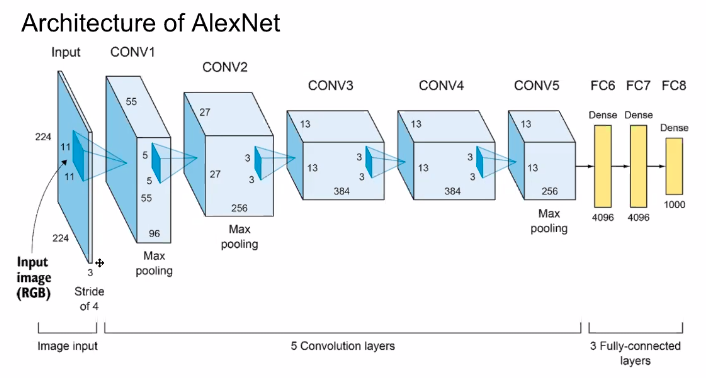

AlexNet has eight learned layers (as shown below): five convolutional layers, some of them followed by pooling, and three fully connected layers, which together help identify the object. A model like ResNet, on the other hand, can have as many as 152 layers to identify or make sense of the object.
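To make the layer structure concrete, the sketch below traces how the spatial size of the data shrinks through an AlexNet-style stack. The kernel sizes, strides, padding and channel counts are the commonly cited AlexNet values, and the 227×227 input is the size usually used so that the arithmetic works out; none of these numbers come from the talk, so treat them as assumptions.

```python
def out_size(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output width/height of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding, output channels); None means a pooling layer,
# which keeps the channel count unchanged.
layers = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, None),
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool5", 3, 2, 0, None),
]
fully_connected = [4096, 4096, 1000]  # the last layer has one output per class

size, channels = 227, 3  # assumed RGB input of 227 x 227 pixels
shapes = []
for name, kernel, stride, padding, out_channels in layers:
    size = out_size(size, kernel, stride, padding)
    if out_channels is not None:
        channels = out_channels
    shapes.append((name, channels, size, size))
    print(f"{name}: {channels} x {size} x {size}")

flattened = channels * size * size  # input length of the first fully connected layer
num_conv = sum(1 for layer in layers if layer[4] is not None)
print("flattened features:", flattened)
print("learned layers:", num_conv + len(fully_connected))
```

Pooling layers have no learned weights, which is why the count of learned layers is five plus three rather than the total number of entries in the list.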

(Source: Angshuman Gosh | DLDC 2021)

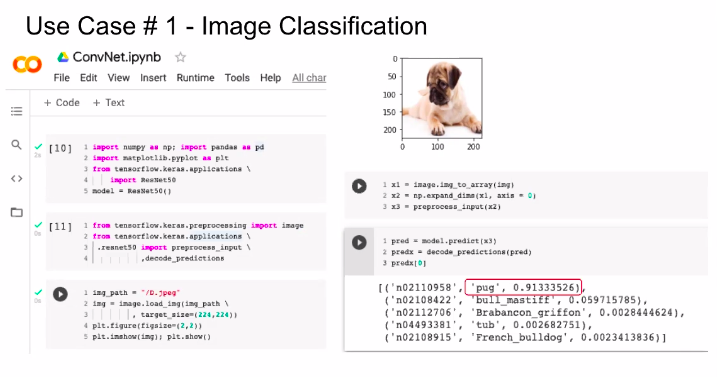

Showing the example of image classification, Gosh explained how the ResNet model was able to recognise the image (pug) with higher accuracy. The source code of ResNet is available on GitHub.

(Source: Angshuman Gosh | DLDC 2021)

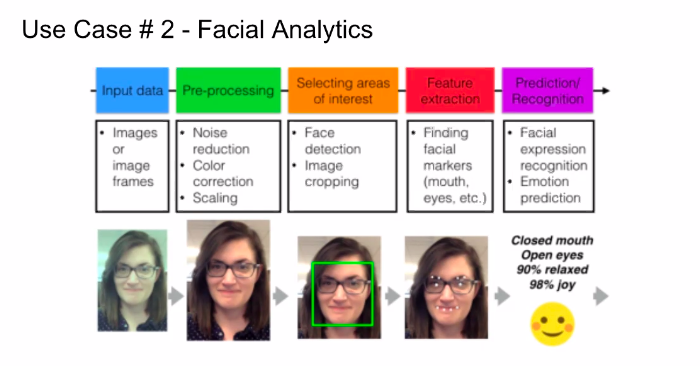

Further, he showed another use case around facial analytics and said that they use an advanced model for tasks such as understanding emotions, face detection and surveillance.

The image below shows a step-by-step process to build a facial analytics model.

(Source: Angshuman Gosh | DLDC 2021)

Some of the popular use cases of computer vision include:

(Source: Angshuman Gosh | DLDC 2021)

Besides this, other applications of computer vision include:

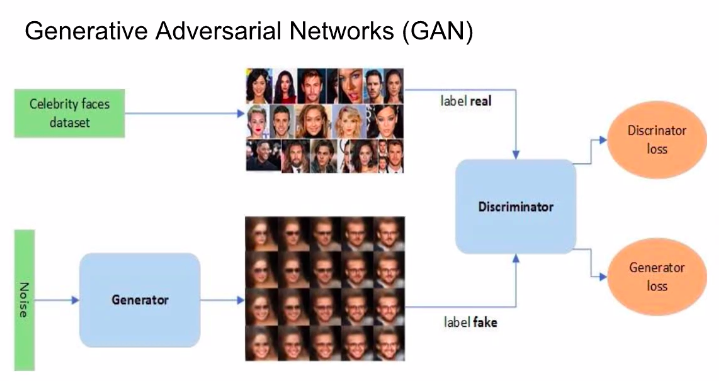

A generative adversarial network (GAN) is a class of machine learning framework, widely used in computer vision, that was designed by Ian Goodfellow and his colleagues in 2014. Whereas neural networks like ResNet or AlexNet try to understand an image, a GAN creates an image that doesn’t exist. So, how does this kind of network work?

Gosh said two different neural networks are at work here and, interestingly, they work against each other. The generator creates fake images, while the discriminator is shown both real images and the generator’s fakes and learns to tell them apart. “Once you repeat this process several times, you can run multiple epochs — forward, backward passes, etc. After a point of time, the generator starts creating really good images which look very realistic, and discriminator can only be able to identify if the image is real or fake,” said Gosh.
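The tug-of-war between the two networks shows up in their loss functions. The sketch below computes both losses from the discriminator's outputs alone, using binary cross-entropy and the widely used "non-saturating" generator loss. This is one common formulation and not necessarily the one shown in the talk; the function names and the sample probabilities are hypothetical.

```python
import numpy as np


def bce(probs: np.ndarray, targets: np.ndarray, eps: float = 1e-12) -> float:
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    probs = np.clip(probs, eps, 1.0 - eps)
    return float(-np.mean(targets * np.log(probs) + (1.0 - targets) * np.log(1.0 - probs)))


def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """The discriminator wants to output 1 for real images and 0 for generated ones."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))


def generator_loss(d_fake: np.ndarray) -> float:
    """The generator wants the discriminator to output 1 for its generated images."""
    return bce(d_fake, np.ones_like(d_fake))


# Early in training: the discriminator easily separates real from fake.
early_real = np.array([0.95, 0.90, 0.97])
early_fake = np.array([0.05, 0.10, 0.02])

# Late in training: fakes look realistic, so the discriminator is reduced to guessing.
late_real = np.array([0.5, 0.5, 0.5])
late_fake = np.array([0.5, 0.5, 0.5])

print("early: D loss", discriminator_loss(early_real, early_fake),
      "G loss", generator_loss(early_fake))
print("late:  D loss", discriminator_loss(late_real, late_fake),
      "G loss", generator_loss(late_fake))
```

When the discriminator outputs 0.5 for every image, its loss settles at 2 ln 2 (about 1.39), the value it takes when real and generated images have become indistinguishable to it.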

(Source: Angshuman Gosh | DLDC 2021)

One of the popular applications of GANs is deepfakes. Deepfakes are synthetic media in which a person in an existing video or image is replaced with someone else’s likeness. Gosh said that Alexander Amini and his colleagues at MIT and Harvard University have developed a neural network model to synthesise celebrities.

The image below shows the deepfake video of former US President Barack Obama, which Amini unveiled in his introductory course on deep learning in 2020.

(Source: Angshuman Gosh | DLDC 2021)

Some of the notable applications of GAN include:

The future of GANs looks bright. Besides these applications, there are several areas in which they can be used, particularly creating infographics from text, website design, data compression, drug discovery and development, and generating text, music and art.
