Eerily realistic: Microsoft’s new AI model makes images talk, sing

SOURCE: INTERESTINGENGINEERING.COM

APR 20, 2024

ChatGPT: the stunningly simple key to the emergence of understanding

SOURCE: PAUL PALLAGHY II HTTPS://MEDIUM.COM/@PAUL.K.PALLAGHY/CHATGPT-THE-STUNNINGLY-SIMPLE-KEY-TO-THE-EMERGENCE-OF-UNDERSTANDING-41FCF7F67BA7

DEC 21, 2022

We are all wondering how ChatGPT can understand us so well, and generate reams of text that is impressively just what we ordered, given that it is only trained to predict the next word.

Of course, being able to predict the next word in a sequence decently helps you look as if you understand.

But GPT goes on to ‘predict’ (let’s say, create) sentences and paragraphs so well that not only is the generated text syntactically and grammatically correct, but the output reveals an uncanny ability to appear to understand your query and/or reference text precisely, along with the internet-scale text it was trained on.

How does ChatGPT / GPT-3 do that?

Is it simply that it has seen just about every sentence pattern that exists?

Hierarchy and combination

The simple answer is: no. The key is how ChatGPT handles hierarchy, that is, the different levels of structure in your query, prompt or reference document.

And how it handles combinations of these levels.

Not to mention how it handles the nested meanings, and their combinations, that occur throughout the terabytes of training text.

So, although the training data needs to include examples that illustrate the multiple meanings and contexts of every word, the model does not need to see every possible combination of them.

Fortunately!

The combinations would be horrendous.
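To put a rough number on ‘horrendous’, here is a back-of-the-envelope sketch. It assumes a hypothetical vocabulary of 50,000 words and counts every possible 20-word sequence; both figures are illustrative round numbers, not properties of any real GPT tokenizer, and most such sequences are of course not grammatical.

```python
# Back-of-the-envelope count of possible word sequences.
# VOCAB_SIZE and SENTENCE_LENGTH are illustrative assumptions,
# not the real parameters of any GPT model or tokenizer.
VOCAB_SIZE = 50_000
SENTENCE_LENGTH = 20


def count_sequences(vocab_size: int, length: int) -> int:
    """Number of distinct `length`-word sequences over a `vocab_size`-word vocabulary."""
    return vocab_size ** length


total = count_sequences(VOCAB_SIZE, SENTENCE_LENGTH)
digits = len(str(total))
print(f"{VOCAB_SIZE:,}-word vocabulary, {SENTENCE_LENGTH}-word sentences:")
print(f"{digits}-digit count of possible sequences (about {total:.1e})")
```

This yields a 94-digit number, roughly 9.5 × 10^93. Even if only a vanishing fraction of those sequences were sensible sentences, no corpus of any number of terabytes could contain them all, which is why generalizing from parts has to take the place of memorizing whole combinations.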

I have seen this to be the case with text-based neural networks in much of my day-to-day work in NLU (natural language understanding) and neural machine translation (between human languages).

So we can process a simple sentence into a hierarchy as follows:

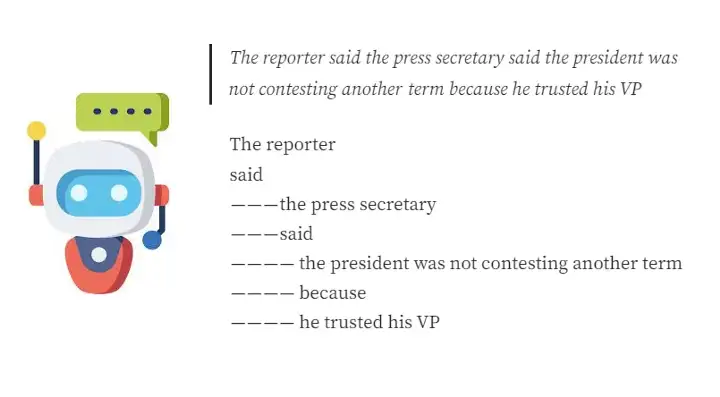

The reporter said the press secretary said the president was not contesting another term because he trusted his VP

The reporter

said

— the press secretary

— said

—— the president was not contesting another term

—— because

—— he trusted his VP

Notice that we humans naturally, without consciously thinking, break the sentence into a hierarchy (or nesting) to understand what each person actually said in this case: what the reporter said, and what the press secretary said.

And notice, critically, that the effect (the president not contesting another term) had nothing to do with either the reporter or the press secretary. Disentangling the hierarchy was crucial to our understanding the proper use of the word ‘because’ here.
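The nesting of the example sentence can be written down as a tree. This is a hand-built sketch for illustration only, not how GPT represents the sentence (its representation is learned and spread across the network). The node types `Said` and `Because` are hypothetical names chosen for the example: a reporting verb takes a speaker and the clause they said, and ‘because’ joins an effect to its cause. Walking the tree recovers who said what, and shows that ‘because’ sits entirely inside what the press secretary said.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional, Tuple, Union


# Hypothetical node types for a hand-built parse of the example sentence.
@dataclass
class Because:
    effect: str
    cause: str


@dataclass
class Said:
    speaker: str
    content: Clause


Clause = Union[str, Because, Said]

sentence = Said(
    "the reporter",
    Said(
        "the press secretary",
        Because("the president was not contesting another term", "he trusted his VP"),
    ),
)


def render(node: Clause) -> str:
    """Flatten the tree back into a plain sentence."""
    if isinstance(node, Said):
        return f"{node.speaker} said {render(node.content)}"
    if isinstance(node, Because):
        return f"{node.effect} because {node.cause}"
    return node


def who_said_what(node: Clause) -> list[tuple[str, str]]:
    """List each speaker with the clause attributed to them, outermost first."""
    pairs = []
    while isinstance(node, Said):
        pairs.append((node.speaker, render(node.content)))
        node = node.content
    return pairs


def find_because(node: Clause, depth: int = 0) -> Optional[Tuple[Because, int]]:
    """Return the Because node and how many reporting levels enclose it, or None."""
    if isinstance(node, Because):
        return node, depth
    if isinstance(node, Said):
        return find_because(node.content, depth + 1)
    return None


for speaker, claim in who_said_what(sentence):
    print(f"{speaker}: {claim}")

because, depth = find_because(sentence)
print(f"depth {depth}: effect = {because.effect!r}, cause = {because.cause!r}")
```

The cause attaches to the innermost clause, two reporting levels down, and not to either act of saying, which is exactly the disentangling described above.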

This means two things

My key point is number 2: GPT, and neural networks in general, understand your text even if they haven’t seen that combination before.

Just like humans.

Otherwise GPT, or Google Translate (which is also neural network-based), wouldn’t do as well as they do.

So, instead, neural networks, including ChatGPT, are good at building small sub-networks that understand each part of a sentence, while distinct sub-networks presumably understand how sentences can be endlessly, hierarchically put together (e.g. a typical long Wikipedia sentence can be deconstructed into a dozen or more levels).
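As a toy analogy for sentences being ‘endlessly, hierarchically put together’: one recursive rule (a speaker says a clause) plus a tiny made-up lexicon generates sentences nested to any depth, including combinations nobody wrote down in advance. This is a hand-written sketch; the speakers and base clause are invented for the example, and it makes no claim about which sub-networks GPT actually forms.

```python
from __future__ import annotations

import itertools

# Made-up lexicon for this sketch; any speakers and base clause would do.
SPEAKERS = ["the reporter", "the press secretary", "the senator"]
BASE_CLAUSE = "the president was not contesting another term"


def embed(chain: tuple[str, ...], clause: str) -> str:
    """Apply the one rule 'SPEAKER said CLAUSE' per speaker; chain[0] ends up outermost."""
    for speaker in reversed(chain):
        clause = f"{speaker} said {clause}"
    return clause


def nesting_depth(sentence: str) -> int:
    """Count levels of reported speech: each ' said ' opens one more level."""
    return sentence.count(" said ")


# Every ordered chain of speakers up to depth 3 yields a distinct sentence:
# 3 + 9 + 27 = 39 of them, all produced by the same single rule.
sentences = [
    embed(chain, BASE_CLAUSE)
    for depth in range(1, 4)
    for chain in itertools.product(SPEAKERS, repeat=depth)
]
print(len(sentences))
print(embed(("the senator", "the reporter", "the press secretary"), BASE_CLAUSE))

# Depth is unbounded: a dozen levels is just twelve applications of the rule.
deep = embed(tuple(itertools.islice(itertools.cycle(SPEAKERS), 12)), BASE_CLAUSE)
print(nesting_depth(deep))
```

The rule is stated once, while the number of distinct chains grows as 3^d with depth d. Learning the rule is cheap; enumerating its outputs is not.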

Understanding and logic

On top of that, the same is probably true for every type of logic, every concept and every aspect of the nature of the world that GPT learns.

Seeing every mix and match of these concepts and forms of logic is not critical, as long as GPT can ‘deconvolute’ (disentangle) their meaning and nature, whether it be, e.g., jealousy / irony / humor or inference / correlation / cause-effect.

It does not need to see every combination.

Instead, GPT learns what ‘and’, ‘or’, ‘not’, ‘except’, ‘instead’, ‘because’, ‘if’ and so on mean.

And how to put them together logically, without having seen every combination.
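As a loose, symbolic analogy (GPT’s connectives are learned, not hand-coded like this), the sketch below defines the meaning of a few connectives once each and then evaluates a nested combination of them assembled on the spot. The helper names (`var`, `and_`, `or_`, `not_`, `except_`, `if_`) are hypothetical, reading ‘except’ as ‘and not’ and ‘if’ as material implication is an assumption, and ‘because’ and ‘instead’ are left out since they are not simple true/false functions.

```python
from typing import Callable, Mapping

Env = Mapping[str, bool]      # truth value of each basic statement
Expr = Callable[[Env], bool]  # a meaning: maps a situation to true/false


# Each connective's meaning is written once, as a way of combining sub-meanings.
def var(name: str) -> Expr:
    return lambda env: env[name]


def and_(a: Expr, b: Expr) -> Expr:
    return lambda env: a(env) and b(env)


def or_(a: Expr, b: Expr) -> Expr:
    return lambda env: a(env) or b(env)


def not_(a: Expr) -> Expr:
    return lambda env: not a(env)


def except_(a: Expr, b: Expr) -> Expr:
    # "a, except when b", read here as "a and not b" (an assumption for this sketch).
    return and_(a, not_(b))


def if_(cond: Expr, then: Expr) -> Expr:
    # "if cond, then ...", read as material implication.
    return lambda env: (not cond(env)) or then(env)


# A combination assembled on the spot from the pieces above:
# "it is night and the door or window is open, except when the owner is home"
suspicious = except_(and_(var("night"), or_(var("door_open"), var("window_open"))),
                     var("owner_home"))
# "if that holds, the alarm sounds"
rule = if_(suspicious, var("alarm"))

situation = {"night": True, "door_open": False, "window_open": True,
             "owner_home": False, "alarm": False}
print(suspicious(situation))                    # True
print(rule(situation))                          # False: the alarm should have sounded
print(rule({**situation, "owner_home": True}))  # True: nothing suspicious
print(rule({**situation, "alarm": True}))       # True: the alarm did sound
```

No rule for this particular combination was ever written; its meaning falls out of the meanings of the parts.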

Not just regurgitating

So GPT is not just parroting or regurgitating examples it’s seen. It’s applying what it has learned to your unique situation.

Just as humans do.

That way, GPT really does look as if it understands, and for many applications it’s as good as (and maybe even little different from) human-style understanding. After all, it usually succeeds in understanding your input and its training data well enough to synthesize compelling, logical output.

Its understanding is frequently logical (though not always perfect), even distinguishing cause from effect, despite the underlying neurons being purely correlative: pattern matching only.

At a deep level, GPT’s sub-networks are firing.

They’re detecting:

That’s the cause!

That’s the effect.

That’s irony.

He’s the murderer!

That was funny.

A and B but not C so . . D

Did I mention that there’s a big academic debate going on over (1) whether ChatGPT can do understanding at all, (2) whether it could even in principle, and (3) whether AI is actually about artificial cognition or not?

LOL.

Of course it’s ‘yes’ to all three.

ChatGPT is probably more like our brains than we’d like to think. The brain is more like an artificial neural network than a mathematically precise symbolic AI algorithm.
