A Hands-On Guide to SwinIR: A Transformer for Image Restoration

SOURCE: ANALYTICSINDIAMAG.COM

DEC 08, 2021

Transformer architectures have become a key ingredient in many complex natural language processing and computer vision applications. On the vision side, you may have come across a blurry or degraded image that nonetheless means a lot to you. Several transformer-based techniques exist for restoring such images, but many fall short of the desired quality. In this post, we’ll look at how to restore or reconstruct an image using the SwinIR transformer. The article covers what image restoration is, the main methods used for it, and how SwinIR restores images.

Let’s start by understanding what image restoration is.

What is Image Restoration?

Image restoration techniques such as image super-resolution (SR), image denoising, and JPEG compression artefact reduction strive to recreate a high-quality clean image from a low-quality degraded image. Image restoration is the process of estimating the clean, original image from a corrupted/noisy image.

Motion blur, noise, and camera misfocus are all examples of corruption. For blur, classical restoration works by reversing the blurring process: a point source is imaged, and the resulting picture, known as the Point Spread Function (PSF), is used to recover the image information lost during blurring.
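As a rough illustration of PSF-based restoration, the sketch below blurs a synthetic image with a known PSF and then inverts the blur with a Wiener filter in the frequency domain. The Wiener filter is one classical choice and is not named in this article; the 3×3 box PSF, the random test image, and the `noise_to_signal` constant are all assumptions made for the example.

```python
import numpy as np

def blur_with_psf(image, psf):
    """Circularly convolve `image` with `psf` using the FFT."""
    otf = np.fft.fft2(psf, s=image.shape)  # transfer function of the blur
    return np.real(np.fft.ifft2(np.fft.fft2(image) * otf))

def wiener_deconvolve(blurred, psf, noise_to_signal=1e-3):
    """Estimate the sharp image from `blurred`, given the PSF that blurred it."""
    otf = np.fft.fft2(psf, s=blurred.shape)
    # Wiener filter: conj(H) / (|H|^2 + K). K keeps the division stable
    # at frequencies where the blur has removed almost all signal.
    wiener = np.conj(otf) / (np.abs(otf) ** 2 + noise_to_signal)
    return np.real(np.fft.ifft2(np.fft.fft2(blurred) * wiener))

rng = np.random.default_rng(0)
clean = rng.random((32, 32))        # synthetic stand-in for a clean image
psf = np.ones((3, 3)) / 9.0         # assumed PSF: a small box blur

blurred = blur_with_psf(clean, psf)
restored = wiener_deconvolve(blurred, psf, noise_to_signal=1e-6)

err_blurred = np.mean((blurred - clean) ** 2)
err_restored = np.mean((restored - clean) ** 2)
print(err_restored < err_blurred)
```

This only works when the PSF is known and the noise is mild. Learned methods such as the ones discussed next are meant for cases where the degradation is unknown or more complex.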

Methods for Image Restoration

Since several groundbreaking studies, convolutional neural networks (CNNs) have become the main workhorse for image restoration. Most CNN-based approaches focus on elaborate architecture designs such as residual learning and dense connections. Although their performance is substantially better than that of traditional model-based techniques, they still suffer from two basic problems that stem from the convolution layer itself.

First, the interactions between images and convolution kernels are content-independent: using the same convolution kernel to restore different image regions may not be the best choice. Second, because convolution processes only a local neighbourhood, it is ineffective for long-range dependency modelling.

The Transformer, in contrast to the CNN, uses a self-attention mechanism to capture global interactions between contexts and has shown promising results on a variety of vision problems. However, vision Transformers for image restoration usually divide the input image into patches of a fixed size (e.g., 48×48) and process each patch independently.

Two drawbacks inevitably follow from this strategy. First, border pixels cannot use neighbouring pixels outside their patch for restoration. Second, the restored image may show border artefacts around each patch. Patch overlapping alleviates the problem, but it adds to the computational load.
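To make the cost of overlapping concrete, here is a minimal sketch that cuts an image into 48×48 patches, first without overlap and then with a stride of 24. The helper `split_into_patches`, the 192×192 image size, and the stride values are hypothetical choices for illustration.

```python
import numpy as np

def split_into_patches(image, patch=48, stride=48):
    """Return all (patch x patch) crops taken every `stride` pixels."""
    h, w = image.shape[:2]
    patches = []
    for top in range(0, h - patch + 1, stride):
        for left in range(0, w - patch + 1, stride):
            patches.append(image[top:top + patch, left:left + patch])
    return patches

image = np.zeros((192, 192))  # placeholder image

non_overlapping = split_into_patches(image, patch=48, stride=48)
overlapping = split_into_patches(image, patch=48, stride=24)

print(len(non_overlapping), len(overlapping))  # prints 16 49
```

Halving the stride roughly triples the number of patches the model has to process here, which is the extra computational load mentioned above.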

How does SwinIR Restore the Images?

SwinIR has shown a lot of promise because it combines the benefits of CNNs and Transformers. On the one hand, its local attention mechanism gives it the CNN’s advantage in processing large images. On the other hand, its shifted window design gives it the Transformer’s advantage in modelling long-range dependencies. SwinIR is built on the Swin Transformer.
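The shifted window idea can be sketched in a few lines. Assuming an 8×8 feature map and 4×4 windows (both arbitrary sizes picked for the example), the code below partitions the map into windows, then cyclically shifts it by half a window and partitions again. Two pixels that sit on opposite sides of a window border in the regular layout end up in the same window after the shift, so attention computed inside windows can connect them. The helper names are hypothetical, and details of the real Swin Transformer such as attention masking for the wrapped-around regions are left out.

```python
import numpy as np

def window_partition(x, window):
    """Split an (H, W) map into non-overlapping (window, window) tiles."""
    h, w = x.shape
    tiles = x.reshape(h // window, window, w // window, window)
    return tiles.transpose(0, 2, 1, 3).reshape(-1, window, window)

def window_of(pixel_id, windows):
    """Index of the window that contains the pixel with the given id."""
    return int(np.argwhere(windows == pixel_id)[0, 0])

size, window = 8, 4
shift = window // 2
ids = np.arange(size * size).reshape(size, size)  # unique id per pixel

# Two diagonal neighbours that lie on opposite sides of a window border.
a, b = ids[3, 3], ids[4, 4]

regular = window_partition(ids, window)
shifted = window_partition(np.roll(ids, shift=(-shift, -shift), axis=(0, 1)), window)

print(window_of(a, regular), window_of(b, regular))  # different windows
print(window_of(a, shifted), window_of(b, shifted))  # same window
```

Alternating regular and shifted layers is what lets information travel across window borders without attending over the whole image at once.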

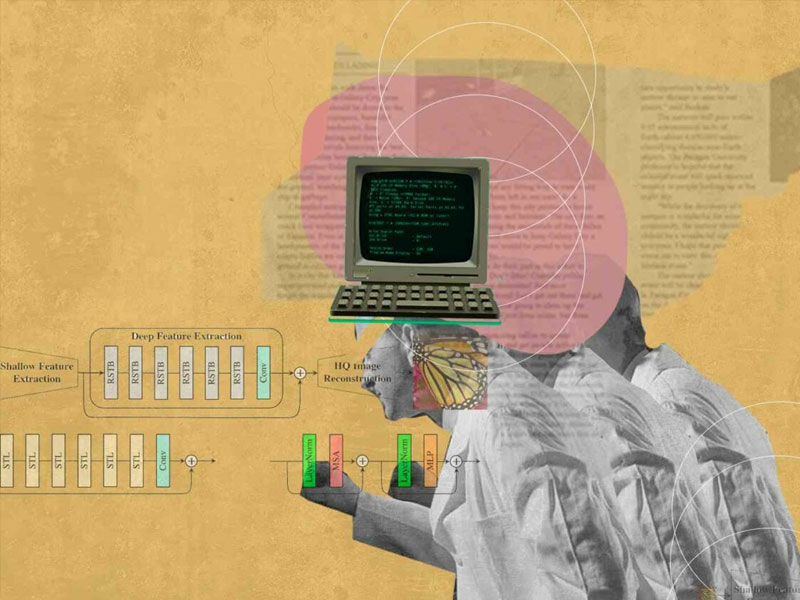

SwinIR is made up of three modules: shallow feature extraction, deep feature extraction, and high-quality image reconstruction. The shallow feature extraction module uses a convolution layer to extract shallow features, which are passed directly to the reconstruction module so that low-frequency information is preserved.

The deep feature extraction module is made up of residual Swin Transformer blocks (RSTB), each of which uses several Swin Transformer layers for local attention and cross-window interaction. In addition, each block ends with a convolution layer for feature enhancement and uses a residual connection to provide a shortcut for feature aggregation. Finally, the reconstruction module fuses shallow and deep features for high-quality image reconstruction.
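The data flow inside one RSTB can be written down as a short structural sketch. This is not the SwinIR implementation: `swin_layers` and `conv` are hypothetical callables standing in for the real Swin Transformer layers and the trailing convolution, and only the wiring (layers in sequence, then a convolution, then the residual shortcut) follows the description above.

```python
import numpy as np

def rstb(features, swin_layers, conv):
    """Residual Swin Transformer block, wiring only.

    features    : input feature map of the block
    swin_layers : sequence of callables standing in for Swin Transformer layers
    conv        : callable standing in for the convolution at the end of the block
    """
    out = features
    for layer in swin_layers:
        out = layer(out)          # Swin layers applied one after another
    return features + conv(out)   # residual shortcut around the whole block

# Toy stand-ins so the wiring can be run end to end.
toy_layers = [lambda t: t * 0.5, lambda t: t * 0.5]
toy_conv = lambda t: t + 1.0

x = np.ones((2, 2))
y = rstb(x, toy_layers, toy_conv)
print(y)
```

With these stand-ins the two layers scale the input to 0.25, the convolution stand-in adds 1, and the shortcut adds the original input back, so every entry of `y` is 2.25.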

SwinIR has several advantages over common CNN-based image restoration models: (1) content-based interactions between image content and attention weights, which can be viewed as spatially varying convolution; (2) long-range dependency modelling, enabled by the shifted window mechanism; and (3) better results with fewer parameters.
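The first advantage, content-based attention weights, can be shown with plain scaled dot-product self-attention. The sketch below is a simplified single-head version with random projection matrices; the multi-head structure and other details of the actual Swin Transformer layer are omitted, and all names and sizes are assumptions for the example. The same projections applied to two different windows produce different attention weights, whereas a convolution kernel would stay the same for both.

```python
import numpy as np

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a set of tokens."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax per row
    return weights @ v, weights

rng = np.random.default_rng(0)
dim = 4
w_q, w_k, w_v = (rng.normal(size=(dim, dim)) for _ in range(3))

# Two windows of 16 tokens each (for example, two flattened 4x4 windows).
window_a = rng.normal(size=(16, dim))
window_b = rng.normal(size=(16, dim))

out_a, weights_a = self_attention(window_a, w_q, w_k, w_v)
out_b, weights_b = self_attention(window_b, w_q, w_k, w_v)

# Same learned parameters, different content, different mixing weights.
print(np.allclose(weights_a, weights_b))
```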

As the architecture diagram shows, the three modules are shallow feature extraction, deep feature extraction, and high-quality (HQ) image reconstruction. All restoration tasks use the same feature extraction modules, and only the reconstruction module differs from task to task.

Given a low-quality input image, SwinIR uses a 3×3 convolutional layer to extract shallow features. A convolution layer is good at early visual processing, which leads to more stable optimization and better results. It also provides a simple way to map the input image space to a higher-dimensional feature space. Shallow features mostly contain low-frequency content, whereas deep features focus on recovering lost high-frequency content.
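A toy end-to-end pass shows how the three modules fit together and how a convolution lifts a 3-channel image into a higher-dimensional feature space. Everything here is an assumption for illustration: the weights are random rather than trained, the feature width of 8 is arbitrary, `deep_fn` is a stand-in for the stack of RSTBs, and a single convolution is used as the reconstruction step. A super-resolution variant would also need an upsampling step that is not shown.

```python
import numpy as np

def conv3x3(image, weights):
    """Zero-padded 3x3 convolution (cross-correlation, as in deep learning).

    image   : (H, W, C_in)
    weights : (3, 3, C_in, C_out)
    """
    h, w, _ = image.shape
    padded = np.pad(image, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros((h, w, weights.shape[-1]))
    for dy in range(3):
        for dx in range(3):
            # Each kernel tap contributes a shifted, channel-mixed copy of the input.
            out += padded[dy:dy + h, dx:dx + w, :] @ weights[dy, dx]
    return out

def restore(lq_image, shallow_w, deep_fn, recon_w):
    shallow = conv3x3(lq_image, shallow_w)   # shallow features
    deep = deep_fn(shallow)                  # deep features (stand-in)
    fused = shallow + deep                   # fuse shallow and deep features
    return conv3x3(fused, recon_w)           # map back to image space

rng = np.random.default_rng(0)
lq = rng.random((16, 16, 3))                       # placeholder low-quality image
shallow_w = rng.normal(size=(3, 3, 3, 8)) * 0.1    # 3 -> 8 channels
recon_w = rng.normal(size=(3, 3, 8, 3)) * 0.1      # 8 -> 3 channels
deep_fn = np.tanh                                  # hypothetical stand-in for the RSTB stack

shallow_features = conv3x3(lq, shallow_w)
restored = restore(lq, shallow_w, deep_fn, recon_w)
print(shallow_features.shape, restored.shape)  # prints (16, 16, 8) (16, 16, 3)
```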

In this post, we have given an overview of image restoration. Specifically, we discussed what image restoration is and how it has evolved and been addressed by techniques such as CNNs and Transformers. We then looked at how SwinIR works in theory; in practice, it has been shown to outperform Real-ESRGAN, while the results of SwinIR and BSRGAN are relatively close to each other.
